A helpful way to organizing your growing collection of unit tests, how interfacing with LLMs just got easier in the R ecosystem, and a clever use of AI to summarize a large collection of blog posts.

Episode Links

Episode Links

- This week's curator: Eric Nantz: @rpodcast@podcastindex.social (Mastodon) and @theRcast (X/Twitter)

- Nested unit tests with testthat

- shinychat: Chat UI component for Shiny for R

- Creating post summary with AI from Hugging Face

- Entire issue available at rweekly.org/2024-W42

- elmer: Call LLM APIs from R https://hadley.github.io/elmer/

- Joe Cheng's sidebot app (R edition) https://github.com/jcheng5/r-sidebot

- EDA Reimagined in R: GWalkR + DuckDB for Lightning-Fast Visualizations https://medium.com/@bruceyu0416/eda-reimagined-in-r-gwalkr-duckdb-for-lightning-fast-visualizations-05b011e8ae39

- Apache superset https://superset.apache.org/

- Postprocessing is coming to tidymodels https://www.tidyverse.org/blog/2024/10/postprocessing-preview/

- Use the contact page at https://serve.podhome.fm/custompage/r-weekly-highlights/contact to send us your feedback

- R-Weekly Highlights on the Podcastindex.org - You can send a boost into the show directly in the Podcast Index. First, top-up with Alby, and then head over to the R-Weekly Highlights podcast entry on the index.

- A new way to think about value: https://value4value.info

- Get in touch with us on social media

- Eric Nantz: @rpodcast@podcastindex.social (Mastodon) and @theRcast (X/Twitter)

- Mike Thomas: @mike_thomas@fosstodon.org (Mastodon) and @mike_ketchbrook (X/Twitter)

- Pachelbel's Ganon - The Legend of Zelda: Ocarina of Time - djpretzel - https://ocremix.org/remix/OCR00753

- Voodoo, Roots 'n Grog - The Secret of Monkey Island - Alex Jones, Diggi Dis - https://ocremix.org/remix/OCR02180

[00:00:03]

Eric Nantz:

Hello, friends. We're back up so 182 of the R Weekly Highlights podcast. This is the usually weekly show where we talk about the latest happenings that are highlighted in every single week's, our weekly issue. Now we were off last week because yours truly did have a a bit of a vacation he forgot about until after recording last week with my kids being off for fall break. But nonetheless, I am back here. My name is Eric Nanson, and as always, I am delighted that you join us wherever you are around the world. And, yeah, fall is in the air as, my co host can see I'm wearing my one of my our hoodies here because it is a little chilly here in Midwest. But, of course, I gotta bring him in now. I also my host, Mike Thomas. Mike, are you, experiencing the chill there too? It is a little bit chilly here, Eric. Not gonna lie. My office, for whatever reason, seems to be the coldest

[00:00:52] Mike Thomas:

room in the house. I don't have great zoneage set up here. So been trying to, put the sweatshirts on and off in between teams calls and get the space heater out, but it's 'tis the season.

[00:01:05] Eric Nantz:

Yeah. You know what's a great space heater? A really beefy server like the one right next to me. No. That's a good idea. Yeah. This one's not too bad, but if you go behind it, yeah, you can more up your hands hands a little bit. But, I I will mention in our week off, probably one of the funniest things I did was we had gone to a place here in Indiana about a bit south of where I met called Blue Spring Caverns, where basically you can go about 200 feet below the surface into literally this set of caves with a waterfall, and we had this fun little boat tour.

And as you get deeper in this, with the tour guy turning his his fancy light off, pitch black, like, you can't see anything. And then if you are completely silent, it is completely silent. Like, it is the closest I've ever gone to a place where I'm literally away from every single thing imaginable. But it it was a good time. You don't wanna be stuck there because you could you know, hypothermia could happen. Luckily, it was only about 40 minute tour, but, yeah, that was, that was kind of like a meditative type experience. So that that was a highlight for me. Very cool. Yep. So I made it back in one piece, and luckily, my kids didn't cause too much trouble or try to tip over boats or anything. So that was a win too. They they were not alone. There were other kids on that, but, of course and when the tour guide said, okay. Let's try this whole silence thing. In about 10 seconds, one of my kids just blurts out laughing. I'm like, gosh. Naturally. Yeah.

[00:02:38] Mike Thomas:

Surprised it lasted 10 seconds.

[00:02:41] Eric Nantz:

Yeah. Yeah. Me too. Yeah. Because I I could've been a lot worse. But, nonetheless, yeah, we had a lot of fun. Good time on the on the fall break, but I'm happy to be back with you. Tell him about the latest r w two issue. And, yeah, guess what? Just serendipitously, the timing worked out that I was a curator for this past issue. So that was a late night, Friday Saturday session to get this together. But, luckily, I think we got a awesome issue to talk about here, but I can never do this without the tremendous help from our fellow Rwicky team members and contributors like all of you around the role of your awesome poll requests and suggestions, which I was always very happy to merge into this week's issue. So first up in our highlights roundup today, we are talking about a pattern that as a package developer or an application developer, you definitely wanna get into the pattern of building unit tests in your package and and application because future you will thank you immensely for building that test to figure out any regressions in your code base, figuring out that new feature, making sure that you've got a good set of rules to follow for assessing its fluidity and quality and whatnot.

Well, as you can imagine, Mike, you can speak from experience on this too. As your package or application gets larger, start building more tests, more tests. Yes. Even more tests. This could be spread into, like, massive set of r scripts in your test that folder or whatnot. And you may be wondering, yeah, what's, what are some best practices for just keeping track of everything or keeping things organized? Well, I was delighted to see that, in our first highlight here, we have this terrific post by Roman Paul, who is a statistical software developer in the GSK vaccines division. On his blog, he talks about a neat concept called nested unit test with test that.

And this was something that I probably thought was possible. I never really tried it out. So let's break this down a little bit here. So as usual, he starts off with a nice example here with, in this case, a simple function for adding two numbers. Of course, that's one of the most basic ones, but that's not the point here. But as you develop, you know, thinking about what are the best tests for a function like this, you might come up with more than 1, like in the first part of the example here where he has a testing of whether the addition of 21+2 is indeed equal to 3.

But you may also want to build in tests for, like, error checking, such as detecting if an error occurs if one of the inputs like a and b are not numerics. And now you've built 2 tests back to back in your script. Now they they, in essence, even though they're separate script they're separate test that calls, they're still using the same function under the hood that they're actually testing. So naturally, what he goes do next is that you can actually bundle both of these tests inside an overall test, might call a wrapper test. He simply calls it ad. But within this, there is, a little trick that we'll get to in a little bit, but he's basically nested in those 2 separate test that calls for the baseline functionality and then testing those input values inside this overall test. Now there is a trick to do if you've tried this before and maybe gotten some, you know, warnings from test stat about, you know, a a, testing or an empty test.

He has, at the beginning of this overall block, a call to expect true whether a function exists, called add. And that that apparently will suppress any warnings about an empty test. That was new to me as a little trick there. So this this paradigm works great for, again, this 2 test case. But now in the rest of the posties talks about why would you wanna invest in this instead of just having all these as separate on top of just in the more cleaner organization. But there may be cases whereas you're building a larger code base of tests, you might want an easy way to skip your tests occasionally as you're iterating through development.

So in the next example, always added as a line after the expect true of skipping all the remaining tests below it with a a simple call to skip. So in that way, if you know you're breaking stuff and you don't wanna quite test again, and you just throw that skip in there, keep iterating, and take that skip out, and then it'll just go ahead and run those tests again just like it would before. And then there are some additional benefits you can have there but takes advantage of a couple different concepts that are familiar to an R developer. One of those is having a little shortcut, if you will, for that function that you're testing.

Maybe it has a long function name and you just wanna do a simple letter for it for the purposes of your test. So he gives this add function an assignment to the letter f, and then simply he's able to use that in all the expect equal or the expect error calls going forward. Again, that might be helpful if you have a pretty verbose function name. And then another one is taking advantage of much like in functions themselves, they have their own scoping rules where you might have then we might wanna reuse different parts of this test scope that may be more self contained for this group of tests, maybe not for remaining tests that are outside of this block, you can take advantage of having function having objects inside this overall wrapper, you know, test that that's going that's the outer layer. And then those can feed directly into those sub test stack calls much like you would with nested functions. That's another interesting approach if you wanna cleanly organize things about duplicating these objects in each test stack call of of objects that are related to each other.

Again, it will depend on your complexity to see just how far you you take this. But, yeah, the possibilities you you can go many different additional directions with this. And in the last example, he talks about how you can group the unit tests by maybe some additional criteria. Perhaps it's based on the type of input data. Maybe it's based on another factor. In this case, he's got ways of grouping it based on whatever he's testing for positive or negative numbers. Interesting functionality there that you can build and play. But in the end, the key part I take away from this is that the test that function is pretty open ended after all. Once you know kind of the the quirks to get around that empty warning, you could do some pretty interesting organization in terms of nesting related tests together, sharing objects between them, and overall having a slightly cleaner code base for your your what could be a massive amount of tests, which is I'm just releasing an app in production this week. I have a lot of business logic tests, so this may be something I have to look into for the future as well. So very night fit nice, fit for purpose post here, and I'll definitely take some learnings from this in my next, business logic testing adventures.

[00:10:27] Mike Thomas:

Yeah. Me as well, Eric. This is really interesting, and like you, it's not something that I had tried before. You know, what we're talking about here is a test that call inside another test that call. You know, not just having multiple expect type calls in the same test that block, which I'm I'm sure folks who have written unit tests before are familiar with, hopefully. Otherwise, your your, your code would be quite lengthy. And and one of the ways that I learned to to write unit tests was to look at the source code of some of my favorite r package developers, primarily Max Kuhn and Julia Silgy.

Because unit tests are are kind of interesting, you know, they're they're very different than a lot of the other R code that you'll write. And, they sort of execute in their own environment as well, which makes some of this stuff a little tricky. I learned this the hard way, you know, trying to download a file inside my testing script and handling, you know, the creation of a variable within a test that block that maybe doesn't exist in the next test that block. And as an r package developer, and I I know, Eric, you share a lot of these same sentiments, you can quickly become sort of obsessed with organization of your code, and I think testing is another place that that applies to.

And these concepts, I think, are really useful from an organization standpoint. I feel like I need to go go right back to some of the r packages that we have right now and take a look because I know that there are opportunities to improve, you know, leveraging what Roman's written about here, you know, particularly in some of the the lower code code blocks in this blog post, underneath local scoping where you're developing some variables within this outer test that call, that you're going to use in some of these inner test that calls that I believe will really just make, you know, some of the the code, run quicker and more lightweight instead of defining, like, global variables at the top of your script that are going to stay there for the remainder of all of the tests being executed.

These can kind of be local to just this particular outer test that chunk. So really interesting. I feel like it's kind of a tough blog post to do justice without actually taking a look at the code and reading through it, but it should click really quickly if you take the time. And it's a pretty short and sweet concise blog post here, to read through it. But, another takeaway for me was that I did not know about the skip function from test that, which skips all of the tests below the skip call in that same test that block. So it'll execute the ones above it, but, you know, the all of the ones below it within that same test that block, as far as I understand, will be skipped, which is is really interesting if you are in the the middle of development, you know, if something's failing and you you need to just sort of, make sure that you're able to lock down, what you understand and then, you know, re execute the the tests that are firing so that you can, you know, work on the bug fixes in a safe and efficient environment. So really, really helpful blog post here, some concepts that I had never honestly seen talked about before or used, that I think are really, really applicable to anybody who's developing in our package or maintaining in our package out there.

[00:13:51] Eric Nantz:

Yeah. That skip function was, underrated gem that I dived into a little bit before this only because of necessity. You may find yourself in a situation, we're gonna get in the weeds on this one, where for my app I'm releasing to production, I had a different set of tests that were more akin to operating in our internal environment and our Linux environment versus when I would run it in GitHub actions, that was technically a different environment. And so I had to skip certain things if it was running on GitHub actions that were more, I'm gonna be blunt here, very crude hacks to get around issues with our current infrastructure for the tests that were appropriate for internal use. So, yeah, the skip there are variants of the skip function as well. There's like a skip if CRAN type function, which is a wrapper that detects if this is running on the CRAN server or not. So it won't run those tests where maybe you wanna run them in development, but you don't care as much for the actual CRAN release of a package. Again, there would be different use cases for these. So, yeah, you're invited to check out the the test that vignettes. There's a lot of great documentation there. But this concept that's been covered here, has not been mentioned in the vignettes of test that before. So I'm really thankful to Roman for putting this together for us because I'm gonna bookmark it and test that related resources to use going forward.

Doesn't it seem like yesterday, Mike, when we were sitting near each other at deposit conf, listening to Joe Cheng's, very enlightening talk about

[00:15:40] Mike Thomas:

using AI chatbots and Shiny. Don't you remember, my friend? Oh, I remember it like it was yesterday, Eric. I was gripping onto the edge of my seat.

[00:15:49] Eric Nantz:

Yes. And I I teased you a little bit before that because I had an inside preview. But, nonetheless, I was still blown away when you actually see it in action. And while I hope the recordings will be out fairly soon, once they are out there, you'll wanna check it out, for you and the audience because Joe Chang demonstrated a very interesting Shiny application. At that time, he was using Shiny for Python that had a, in essence, a chat like interface on the left hand margin, and then it was visualizing through a few different, like, summary tables and visualizations, a restaurant, tipping dataset.

But when Joe would write an intelligent kind of question in the prompt saying, show me the the tip summaries for those that are males, Instead of being a shiny app developer trying to prospectively build all these filters in yourself, the the chatbot was smart enough to do the querying itself, and it rerendered the app on the fly. It was amazing. Many in the audience were amazed. Mike and I, of course, are looking at each other. It's like, yep. We wanna use this.

[00:16:59] Mike Thomas:

Yep. We've been skeptical about AI. Have some harsh feelings for it, at some points in time, but, yeah, we wanna use this. I agree.

[00:17:09] Eric Nantz:

So as I mentioned, that was using Shiny for Python, and he had mentioned that the R support was coming soon. Well, that soon is now, and you might call this an inside scoop, so to speak, because while we're talking about is public, it hasn't really been publicly announced yet by time deposit team, so you get to hear it here first. But there is a new package out there, by Joe Chang himself called shiny chat. This is basically giving you as a Shiny developer a way to build in in a module like fashion the chat user interface component inside your Shiny apps. And it indeed on the tin works as advertised where you can call a simple function to render this chat interface in very similar to, like, a chat UI call.

We have a little server size stuff we'll talk about shortly, and then your app will have a very familiar looking chat interface that you can type queries in and get results back. There are some interesting things under the hood here that respect the Shiny itself and giving you that that chat like experience that you see in the external services with some clever uses of synchronous and asynchronous processing under the hood. There is example in the in the GitHub for the package that you can take a look at as well. But there is there is another component to this, Mike, that ShinyChat on its own doesn't do all the heavy lifting much like, you know, robust Shiny development. We always say that for heavy lifting of, like, server processing or business logic, you wanna put that into its own dedicated set of functions or packages.

So hand in hand with shiny chat, we will put a link to this in the show notes, is a new package that's being authored by Hadley Wickham called Elmer. Elmer is in our package to interface directly with many of the external AI services that we've been using, for a while now, such as, of course, OpenAI. There's been now support for Google Gemini. There's support for Claude and support for all WAVA models if you've had those deployed on an API like service. So Elmer is actually the way that Shiny chat from the server side is going to communicate directly with this AI service, and this is where you feed in any customizations to the prompt before the interface is loaded, which is something I've been learning about, recently, prompt engineering.

So Elmer will give you a way to feed that in directly, And then you can also take advantage of, of course, the responses being returned to you, and you can do whatever you want with those. But the real nugget here, and, again, this is actually how that restaurant tipping app works under the hood, is in addition to the prompt being sent to this chat interface, you are able to select what are called tools for this. Now what is a tool in this case? We're not talking about, like, a, you know, tool in the traditional sense. You are letting the AI take advantage of, say, a function or maybe functions from another package to help assist you with taking the result from that initial query or or that message you send to the chatbot and maybe calling something on your behalf to help finish off the process.

So an example I can illustrate here is if you ask a chatbot, hey, how long ago was, say, the declaration of independence written? Guess what? Due to the stateless nature of these chatbots, it doesn't really know what time it is for you right now when you call that. It may try to guess that you're calling it in October or whatnot, but it's not gonna really know that answer. So how to get around this is you can help feed in via this tool argument in Elmer when you set up the chat interface, a function that it can call to help answer these kind of questions.

This opens up the possibilities to help the AI bot get answers to things that through its, you know, training that it's done externally or internally, depending on how you're using it, would not be able to answer on its own. This took this taken me a little bit to get used to, but that time example was one thing that kinda clicked. But that, in theory, is what's happening with this restaurant tipping app that we saw at Pazitconf where Joe built in a tool, I. E. A function to basically execute that what became a SQL query as a result from that question that we asked it. And then it would execute it on the source data that was fed into the app. It was set as a reactive doom. Every output is refreshed in real time after that query is executed.

And that is something that is amazing to me that now we can give the chatbot a little more flexibility. But just like if I was training, like, a a new colleague to get used to one of my tools or one of my packages, You've gotta be pretty smart with it or or I should say be helpful with it. We have a function. What do we talk about? Have good documentation. Have good documentation of the parameters, the expected outputs. That is going to be necessary for Elmer to take that that source of that function or that function call and then help assist you with the syntax you need to feed this in as a tool to the AI to the AI service.

So there is a lot to get used to here, but we'll put a link in the show notes to, again, the the restaurant tipping examples. You can see this in action. But now the combination of Shiny chat and Elmer, we can start building these functionalities into our Shiny apps that are written in r, and we are always scratching the surface here. This stuff I wanna stress is an active development. These are not on CRAN yet, so there could be features that are breaking changes or whatnot. So be wary of that. But they are very actively working on this as we speak, and I'm excited to see what the future holds. And my my creative wheels have already been turning about ways that I can leverage this at my in my day job as this gets

[00:23:47] Mike Thomas:

gets more mature. Yeah. Eric, I mean, you you summarized it very well. I think that presentation by Joe really blew a lot of us away. I think it, created a lot of opportunity, probably a lot of of work for us, right, to to be able to try to integrate these things, especially if any of our bosses saw that presentation, to try to integrate this type of functionality and user interface and, you know, capabilities into our Shiny apps to, you know, I I think not only reduce the number of filters maybe that we need to create, but also sort of allow this sandbox environment for users to play around with the data that we're displaying in our apps such that we don't necessarily have to worry about boiling the ocean for them anymore and, you know, being able to to handle every possible little edge case that anybody could ever want to see, before we just yell at them and tell them to learn how to write SQL against the database. Right? Not that I've ever been there before. Oh, never.

But it it it's very interesting how a lot of this tooling by posit seems to be, you know, sort of quietly taking place behind the scenes. But, obviously, you know, I think this Elmer package is doing a lot of the heavy lifting, and it has a great package down site if you haven't taken a look at it yet. And this tool calling Vignette is is a fantastic introduction to to sort of how that works and how we'll be able to I don't know if customize is is the right word, but really be able to nicely integrate these large language model capabilities into our our workflows, right, and not have as much of a disconnect as I would have imagined there to be at the beginning. So it it's pretty incredible that we we do have this tooling. You know, I think as as Joe said, there are a lot of considerations that you need to take around, you know, potential safety to ensure that these large language models aren't actually getting direct access to the data in your app and ideally just executing a a or the models are returning a a query maybe based on the schema of your data without ever actually seeing, you know, your data itself in the case of, you know, that tipping example where the the model is really mostly returning a SQL query, essentially, that you can leverage to execute against, you know, your underlying data source, which, in my opinion, you know, those are the situations unlike this, you know, this this time example is interesting.

But I think, you know, the the former that I was talking about are are probably the most useful, as in terms of low hanging fruit that I can see applications of these large language models into Shiny apps. So it's really interesting to see all of this start to come together. Obviously, we saw some of this on the Python side first and and really excited that, the ecosystem on the the R side is expanding, just as well. So lots to see here already. I can imagine only more to come. And you, I I believe, Eric, had the opportunity to participate in a little hackathon around this stuff. I haven't gotten my hands quite as dirty yet, but that is really, really, on the forefront of what we're we're trying to do,

[00:27:16] Eric Nantz:

at the day job here. So, hopefully, I'll be able to report back on on some of our findings pretty soon. Yeah. That was, very, very fortunate that I I got the invitation to see some of the preview versions of these interfaces, and, you know, I wouldn't turn that down for anything. And, Mike, though, as we talked in the outset, I have been resistant is kind of a strong word, but it has been very not not very tempting for me to adopt a lot of this yet in my daily work. But I think with the right right frame of mind in this and the right use cases, this can be a massive help. I think as long as we have a way, especially in my industry, to only let it access what it needs to access, but yet this tooling functionality gives us a way to then act as if maybe the AI did get us 80% of the way there, and then we would have to manually do the rest of the 20%.

We're basically giving them a way to get to that 20%, but still run it in our local environment to do it. It's not going back to them to run it. That that is or to get the results back itself, so to speak. It's acting on my behalf. That's a nuance I'm still kinda wrestling with. It's still something that seems a little magical to me, but I think what we'll have a link to is the r version of this side restaurant app example in the show notes as well. The key another key part of this along with the tools supplying the tool function is the prompt itself. So in the example we'll link to, Joe has put together a markdown document that is the prompt that's being sent to it. Basically, it's reading the entire line this markdown file where it's very verbose with the AI, you know, the AI bot about what it has access to, what is expected to do, what it should not do, and then making sure that it doesn't go outside those confines.

It's interesting to see just what the nuances would be when you try this on different back ends, so to speak. Because I have heard that, yeah, things like OpenAI, this works really great almost a 100% of the time. There may be other cases where a self hosted model isn't quite there yet, but that's just the nature of this business. Right? Some of these models are gonna be more advanced than others because of the how many billings of parameters that are used to train the things. So there's a lot of experimentation, I think, is necessary to figure out what is best for your particular use case, but you get the choice here. That was one of the things I was harping on when I first heard about this is I don't wanna be confined to just one model type. I wanna or one service. I wanna be able to supply that as the open source versions do get more advanced. So there's the responsibility aspect is still very much at play for what I'm looking at.

But the fact that now this is our disposal about me having to tap into and again, no no shade to our friends in Python, but I've heard that the langchain framework can be a bit hairy to get into, and Elmer is really trying to be that very friendly interface on top of all this about me having to engineer it all myself. So I'm super impressed that with about 4 hours of work I did in this hackathon to build an extension of this restaurant type example with podcast data, I was able to get to even about, like, 85% of the way there in that amount of time.

I'm telling you, when I picked up Shiny, it took me well more than 4 hours to get to where I wanted to get to initially. So I I'm very intrigued by what we can accomplish here. Well, we're going to stay on the AI train for a little bit here, Mike, in our last highlight here, because one of the things that has been one of the more, I might say, low hanging fruit for many use cases across different industries and different sectors is being able to ingest maybe more verbose documentation or more verbose, sources online and generate a quick summary of that.

We're seeing this in action even with some, you know, external vendors. Those of you that live in the Microsoft Teams ecosystem probably know that there are things like a chat copilot for meeting summaries, which in Middle East can sometimes be a bit odd when their results come through, but that's that's that's all happening. That's all happening here. Well, imagine you have a lot of resources at your disposal, and maybe you could take the time to go through each of these. Maybe they're a blog, and you just summarize them quickly for maybe sharing on social media or sharing with your colleagues. But is there a better way? Well, better or not, no. It's up to you to decide. But our last highlight here, we've got a great interesting use case from Athanasia Mowinkle, who has been on the highlights quite a bit in the past, talking about her adventures of creating summaries of posts from her blog with artificial intelligence surfaced via Hugging Face.

So as I mentioned, her her, motivation here was she's got a lot of posts on her blog, and it's a terrific blog. You should definitely subscribe to that if you haven't already. And she wanted to see what she could do to help get these summaries for additional metadata to be supplied in, and he she saw a colleague of hers that was using OpenAI's API to create summaries of his blog, and she wanted to, you know, again get away from the confines of Python on this. She wanted to give Hugging Face a try by calling it with directly an R. The rest of the post talks about, okay, first getting set up with a hugging face API, which again, you know, pretty straightforward.

Having getting an API key, which is pretty familiar to any of you that have called web services from r in the past. And then she is going to leverage a very powerful ACTR 2 package to build in a request to Hugging Face to speed a set of inputs and then doing the request, you know, the request, summarization or the request parameters. It's a lot of a lot of, like, low level stuff, but once you get the hang of it, it works, which by the way, that Elmer package we were just talking about is wrapping HTTR 2 under the hood to help with these requests of these different API services. So maybe in the future, that will get Hugging Face support. But nonetheless, she has a nice tidy example here of using you know, making a request to, feed in, I believe, some summary text and then preparing that for a summarization afterwards where she's got a a custom function to grab the front matter of her post, determining if it needs a summary or not, and if it does need it to basically now call to this custom function for the API to grab that content back and then to summarize that into a file so that it can go back into the markdown front matter of her post.

We've actually covered her explorations of adapting front matter to her post in a previous highlight, I wanna say, about a month ago. So this is very much using those similar techniques. So go check that episode out if you want more context to what's happening in these functions, but she's reusing a function that add the con the post summary to the front matter and then being able to write that out as a pretty nice nice and tidy process. So in the end, she links to the entire the entire collection of functions. It's about 7 or 8 functions here. Not not too shabby. It's a great way to learn under the hood of what it's like to get results from these API services like Hugging Face, and you could use the same technique with OpenAI as well from the r side if you wanted to.

But this is this is really going under the hood of what now I believe the Elmer package is gonna try and wrap for you in a nice concise way. But for me, sometimes the best way to learn is to sometimes brute force this myself and then be able to take advantage of the convenience, but knowing what that abstraction that, in this case, Elmer would bring you is really doing under the hood. So it's a really great learning journey that she's she's authored here and then she seems pretty satisfied with the summarizations that she's able to get here. But Hugging Face does give you an interesting approach to feed in a model of your choosing to that to your account and then be able to call that via an API.

So I believe you can feed in things like llama models and other ones there as well. I haven't played with it myself yet, but it's another intriguing way to take a set of, in this case, publicly available content via her blog post, grab some interesting summaries, feed that back into her post, front matter so that her blog can show these summaries very quickly without her having to manually craft this every step of the way. So again, great examples of h t t r two in action and Waze and her examples talking about the custom functions that she's done to grab these posts, grab the content, put in the summary from the API service from hugging face back into the front matter, and then rerender the site. So there's a lot of a lot of interesting nuggets at play here. And, again, great use of engineering with the new tools available to us to make your, crafting of these summaries a lot easier, which I might have to do for our very podcast here, may Mike, maybe I don't have to write my summaries anymore. Hey. That's not the worst idea. And you know what?

[00:37:22] Mike Thomas:

I don't, you know, I don't know if this is sad or a good thing, but I feel like large language models because they're trained on the Internet in terms of, like, SEO or or and and summarizing some text, you know, so that it's going to look the best online. I gotta hand it to them. They're probably better at that than I am in terms of trying to boost the SEO for for my own website. So I should probably take a page out of Athanasios blog post here and, try that myself. You know, one of the the things that just always, you know, makes me happy, I guess, is looking at h t t r two code for building these, API requests. You know, we don't have to write 4 loops anymore for retrying an API if it if it fails the first time. We have our request underscore retry function. There's this request cache function, to be able to build a cache within, your your API request, which is really fantastic, and it's just this really nice pipe syntax to build this up. It looks so clean, compared to some of the crazy stuff that I used to do to try to call, APIs in a a neat manner.

And it looks like, you you know, again, the Hugging Face ecosystem is one that I should know more about. I don't, unfortunately, but upon some research here, it looks like there's there's probably a lot of different text summarization models that, Athanasia could have chosen from. The one, it looks like that, she leveraged here is called Distilbart CNN, assuming that's for convolutional neural network 126. I think there's a lot of different trade offs in terms of, you know, the the size of these models that you can use. I don't know what the pricing looks like for Hugging Face, in terms of, you know, how many requests you can make to a particular, you know, model endpoint, before you start getting charged or how much it costs, you know. And I imagine that the the larger size models, which are the better performing ones, probably cost more than the smaller size models, but that's just something to watch out for if you are, you know, taking the time to try to recreate Athanasi's blog post or leverage, her work here to be able to do this for your own purposes.

But, you know, as you mentioned, Eric, the ability to integrate your model of your own choosing, into this function, I think, is a really nice feature in the way that she's laid out this ad summary, function here at the end of the blog post that sort of, encompasses everything, you know, that we're doing in this workflow and being able to choose between some of these more closed source models versus some of the more open source models, like, llama, I think is is really a neat thing that we have at our fingertips. So fantastic, blog posts here that she's put together. You know, love the code. Love the way that she formats her code. I think it's really legible.

And I guarantee that I will have a use case where I'll need to probably ask you, hey. What was that blog post that, was on the highlights, you know, a few months ago, when it comes time for me to try out this exact same type of thing for my own purposes?

[00:40:40] Eric Nantz:

Yeah. And there's there's other nuances here, again, tying back to what we talked about recently here. What I noticed as she mentions as she was preparing the the the post content to go to the the AI service on Hugging Face is as she mentioned that she had a little gotcha when she kept it in the YAML structure that the results weren't quite making sense coming back. And this is one thing that it's taking my old timer brain a bit much getting used to. When you feed in this information for it to digest, it can be as if you just wrote it in plain text, basically. Like, you can treat it. Like, even with a prompt example from the restaurant app, that's as if, like, you and me just took, like, a half hour to write maybe to a colleague what we wanted them to do. It's kinda like instructions for a task.

Like, it it's I'm just so I don't know. I have this, like, muscle memory of, like, I have to do things so structured or it's gotta be in this syntax of YAML or JSON and whatnot. These prompts are just like plain text stuff. It's like it doesn't make sense how it can parse it all out. But, again, I'm just not giving it enough maybe credit under the hood to ingest, like you said, these online resources and to be able to do what it needs to do even just with somebody banging at a keyboard to write this out for a half hour or so. So she's got, again, a convenience function to strip all that YAML stuff out of the post content so that it can just go directly in. It's it's just amazing just what they're capable of. And, yeah, I I realize that sometimes I can sound too optimistic on this show sometimes, so I'm gonna have to play with this myself with some of the sources I make. But, spoiler alert, the podcast service that we do use does give me a plug in to execute to generate summaries for me. So maybe I just have to switch that bit on, but I imagine it's doing something like this under the hood. Very interesting. No. I'd like to see how it does that.

In this, for for the rest of this issue. So we'll take a couple of minutes for our additional finds here. And certainly, in the world of exploratory data analysis, especially if you've been in industry as long as Mike and I have or even if you're new to it, you know that in terms of business intelligence and, you know, looking at data, there are some established players in this space, IE Tableau and Power BI, which give you that yeah. Yeah. And we're we're doing the, the thumbs down from us on the video here. But, it can be hard to crack that nugget if you're an R user and you still want a way to quickly build these, you know, great shiny dashboards, but, a way to get started.

Well, I am I'm intrigued by this this one I'm gonna mention here by Bruce Yu in his post called EDA reimagine in r with the gwalker package plus duckdb for lightning fast visualizations. And so, basically, what gwalker does, which I'm gonna have to dig into some more, is it takes a data frame or a data or a database, and it's able to translate into a drag and drop type UI interface that will look very familiar to those in the Tableau and Power BI ecosystem. But then the user that's consuming this can simply use their mouse to explore the data with drill downs, with nice little histograms above like column names.

It looks really intriguing even to let you look at kind of quality metrics of your data and, again, be able to have this interface built right away to do this. It's pretty fascinating. So apparently, there's also a Python package that accompany this called pygwalker that if you're on the Python side, you might want to look at as well, but that could, lower the barrier of entry quite a bit for those maybe at your respective organization. If you get some pushback from certain people, say, yeah. Shiny's cool, but it's just so hard to get something up and running quickly. Maybe g Walker could get you halfway there, especially for an EDA context. So I'll be paying attention to this more closely.

[00:45:14] Mike Thomas:

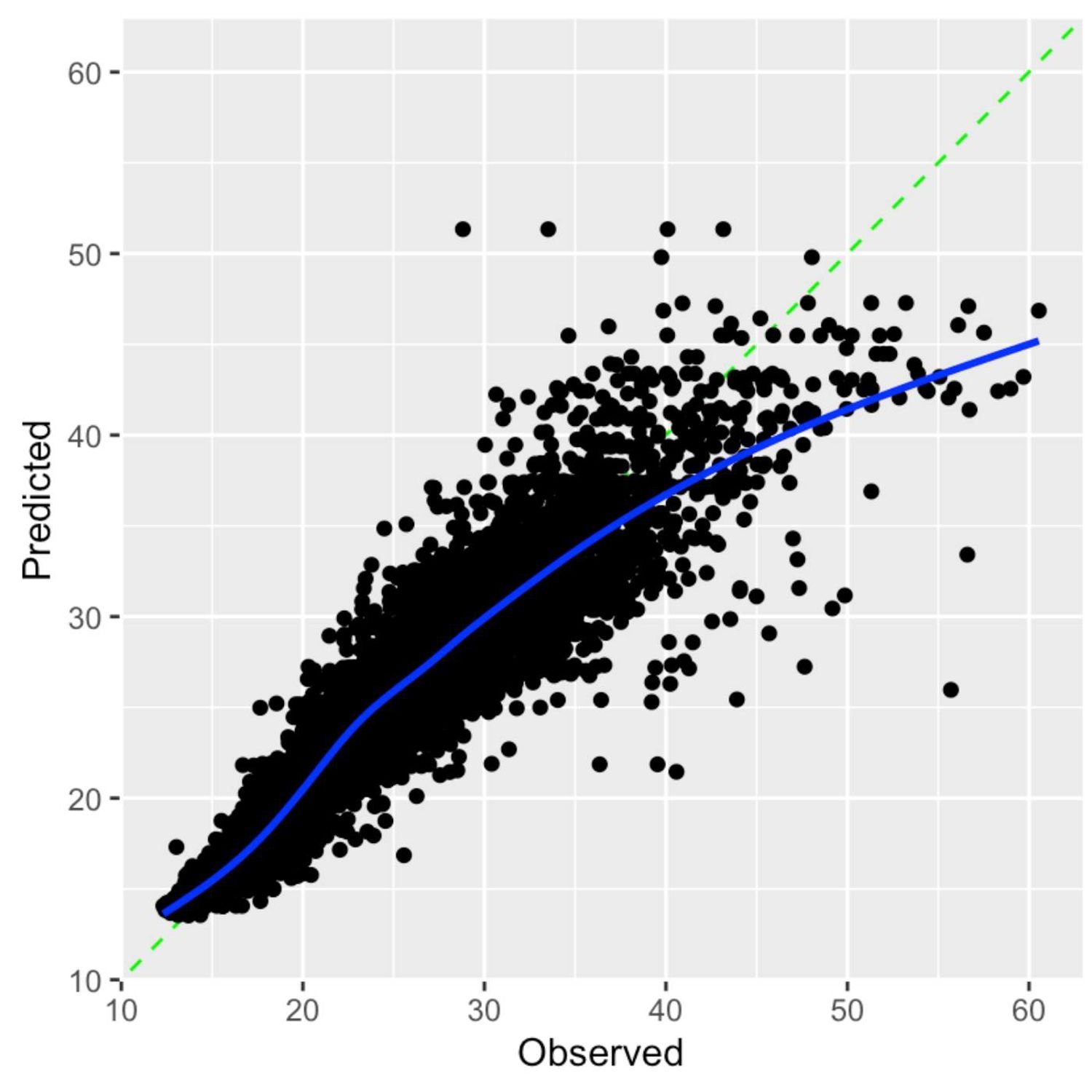

That's pretty interesting, Eric. Another one that I would check out that I think is kind of in that same vein is the Apache Superset project. Have you seen that? I have not. So it's open source, modern data exploration and visualization platform. It's like a dashboarding tool, kinda looks like Tableauy, allows you to write custom SQL if you want to as well. Pretty pretty incredible under, I think, that whole sort of Apache umbrella. But this GRarker package reminds me quite a bit of that. So this is this is very interesting as well. One thing that I found that I was really interested in is, this blog post titled post processing is coming to tidy models. It's a blog post from the tidy models team, Simon Couch, Hannah Frick, and Max Kuhn, essentially about sort of this new I think it's our package or function. Yes. It is in our package, called Taylor.

That is sort of like recipes except for at the end of your model in terms of developing the workflow at the beginning of your model. And what it does is it takes the results of your model and does some tuning, tailoring, if you will, post processing of those results to, you know, one example here that they use is, a situation where your model is predicting, you know, your your class probabilities of of 0.5 are going to 1 class, and and the ones that are less than 0.5 are going to the other binary class in your dependent variable. And if you look at maybe your model results, you know, most of the data is coming in on one side or the other. It's not sort of evenly distributed, on either side of that 0.5 threshold.

You know, a lot of times, we see this in situations where there's class imbalance in the dependent variable. And let's be honest. I mean, if you are out there working on machine learning problems all the time where you have great class balance, in your dependent variable, I think that you're in the minority because the rest of us in the real world, face this issue constantly, unfortunately, where it's it's hard to find, you know, that that one case where where things are going, bad, if you will. And I think probably that stems from hopefully, if you're running a business, you know, things are going well more often than they're going bad. So you probably have less observations in your data of things going poorly. But I'll save that tangent for another day. But one place I think that we've struggled a lot in machine learning in the past is trying to find the right approach to dealing with class imbalance.

You know, resampling can kind of conflate results, different ways. And I I think this post processing technique that might allow us to be able to better handle those types of situations. So there's great examples in here for both, you know, binary, dependent variables as well as, continuous dependent variables to be able to adjust, you know, sort of your your output and improve your root mean mean squared error and things like that. It's a fantastic blog post. I'm really excited about it. There's more to come here, but all of this this code looks like a really nice complement to what already exists in the tidy models ecosystem. It should feel pretty natural to folks who are comfortable with the recipes, our package, leveraging, you know, similarly named functions, if you will, just within this tailor package on the back end of your model. So something I'm I'm really, really excited about, and and really, thrilled to be able to to try to kick the tires on this as soon as possible.

[00:49:13] Eric Nantz:

Yeah. I I know post processing in general has been especially, like you said, class imbalance. I've never met a dataset with perfect balance in my wife yet, especially in my day job. So having a way to to interrogate this more carefully and getting these these summary metrics and visualizations. Yeah. This is this is very, very important, and I can I can tell that it looks like the team has taken their their their due diligence, so to speak, to build this in with a nice unified interface? So like the rest of the tiny models ecosystem, there should be something that's very, you know, quick to adopt in your workflows, and I'm really excited to see to put this in action. And so if our messy, predictions

[00:49:52] Mike Thomas:

that we've done in the past, for sure. We got survival modeling. Now we got post processing. I don't know what's next.

[00:49:59] Eric Nantz:

I don't know, man. That that Max over there and Julia, they they know how to crank them out every every year. There's always something new. So credit to them for, you know, blowing our minds almost every on a yearly basis. But, yeah. And we hopefully the rest of the our weekly content will give you some mind blowing moments as well. There's a lot more that we couldn't get into today, but definitely check out our sections on the new packages out there. I saw some great stuff related to life sciences that has landed as well as some other great tutorials out there, from familiar faces and new faces in the r community.

And how do we keep this project going? Well, a lot of it relies on all of you out there to send us your suggestions for great new content. The best way to do that, head to rweekly.org. Hit that little top right ribbon in the upper right corner to send us a poll request to the upcoming issue draft where our curator will be able to get that great new blog post, new package, tutorial, package update into our next issue. And, again, huge thanks to those in community that sent your poll request. They are much appreciated. It makes our our job a lot easier on the curator side to have that given to us. And, also, we love to have you involved as well in terms of listening to this show.

You can send us a fun little boost if you're listening to one of those modern podcast apps like Podverse Fountain or Cast O Matic. They're all great options to get you up and running with that functionality. And we have a nice little contact page directly in the show notes. I mean, it should be in the show notes if the AI bots don't remove it. We'll find out. But nonetheless, we also like to hear from you on social media as well. I am on Macedon these days with at our podcast at podcast index.social. I'm also on LinkedIn. Just search your search my name, so to speak, and you'll find me there. And occasionally on the Weapon X thing, I may not post very much, but I'll definitely reply to you if you shout at me. So, Mike, where can listeners get a hold of you? You can find me on mastodon@mike_thomas@phostodon.org,

[00:51:57] Mike Thomas:

or you can find me on LinkedIn by searching Ketchbrook Analytics, ketchbrook, to see what I'm up to lately.

[00:52:05] Eric Nantz:

Very good stuff. And, Mike's posts are authentic so this week. Sometimes on LinkedIn, you can see some of those, you might call, should've rated posts, but Mike's always Mike always keeps it real. Try to. Absolutely. So we're gonna close-up shop here for this edition of our weekly highlights, and we'll be back with another episode, at least I hope we will, of our weekly highlights next week.

Hello, friends. We're back up so 182 of the R Weekly Highlights podcast. This is the usually weekly show where we talk about the latest happenings that are highlighted in every single week's, our weekly issue. Now we were off last week because yours truly did have a a bit of a vacation he forgot about until after recording last week with my kids being off for fall break. But nonetheless, I am back here. My name is Eric Nanson, and as always, I am delighted that you join us wherever you are around the world. And, yeah, fall is in the air as, my co host can see I'm wearing my one of my our hoodies here because it is a little chilly here in Midwest. But, of course, I gotta bring him in now. I also my host, Mike Thomas. Mike, are you, experiencing the chill there too? It is a little bit chilly here, Eric. Not gonna lie. My office, for whatever reason, seems to be the coldest

[00:00:52] Mike Thomas:

room in the house. I don't have great zoneage set up here. So been trying to, put the sweatshirts on and off in between teams calls and get the space heater out, but it's 'tis the season.

[00:01:05] Eric Nantz:

Yeah. You know what's a great space heater? A really beefy server like the one right next to me. No. That's a good idea. Yeah. This one's not too bad, but if you go behind it, yeah, you can more up your hands hands a little bit. But, I I will mention in our week off, probably one of the funniest things I did was we had gone to a place here in Indiana about a bit south of where I met called Blue Spring Caverns, where basically you can go about 200 feet below the surface into literally this set of caves with a waterfall, and we had this fun little boat tour.

And as you get deeper in this, with the tour guy turning his his fancy light off, pitch black, like, you can't see anything. And then if you are completely silent, it is completely silent. Like, it is the closest I've ever gone to a place where I'm literally away from every single thing imaginable. But it it was a good time. You don't wanna be stuck there because you could you know, hypothermia could happen. Luckily, it was only about 40 minute tour, but, yeah, that was, that was kind of like a meditative type experience. So that that was a highlight for me. Very cool. Yep. So I made it back in one piece, and luckily, my kids didn't cause too much trouble or try to tip over boats or anything. So that was a win too. They they were not alone. There were other kids on that, but, of course and when the tour guide said, okay. Let's try this whole silence thing. In about 10 seconds, one of my kids just blurts out laughing. I'm like, gosh. Naturally. Yeah.

[00:02:38] Mike Thomas:

Surprised it lasted 10 seconds.

[00:02:41] Eric Nantz:

Yeah. Yeah. Me too. Yeah. Because I I could've been a lot worse. But, nonetheless, yeah, we had a lot of fun. Good time on the on the fall break, but I'm happy to be back with you. Tell him about the latest r w two issue. And, yeah, guess what? Just serendipitously, the timing worked out that I was a curator for this past issue. So that was a late night, Friday Saturday session to get this together. But, luckily, I think we got a awesome issue to talk about here, but I can never do this without the tremendous help from our fellow Rwicky team members and contributors like all of you around the role of your awesome poll requests and suggestions, which I was always very happy to merge into this week's issue. So first up in our highlights roundup today, we are talking about a pattern that as a package developer or an application developer, you definitely wanna get into the pattern of building unit tests in your package and and application because future you will thank you immensely for building that test to figure out any regressions in your code base, figuring out that new feature, making sure that you've got a good set of rules to follow for assessing its fluidity and quality and whatnot.

Well, as you can imagine, Mike, you can speak from experience on this too. As your package or application gets larger, start building more tests, more tests. Yes. Even more tests. This could be spread into, like, massive set of r scripts in your test that folder or whatnot. And you may be wondering, yeah, what's, what are some best practices for just keeping track of everything or keeping things organized? Well, I was delighted to see that, in our first highlight here, we have this terrific post by Roman Paul, who is a statistical software developer in the GSK vaccines division. On his blog, he talks about a neat concept called nested unit test with test that.

And this was something that I probably thought was possible. I never really tried it out. So let's break this down a little bit here. So as usual, he starts off with a nice example here with, in this case, a simple function for adding two numbers. Of course, that's one of the most basic ones, but that's not the point here. But as you develop, you know, thinking about what are the best tests for a function like this, you might come up with more than 1, like in the first part of the example here where he has a testing of whether the addition of 21+2 is indeed equal to 3.

But you may also want to build in tests for, like, error checking, such as detecting if an error occurs if one of the inputs like a and b are not numerics. And now you've built 2 tests back to back in your script. Now they they, in essence, even though they're separate script they're separate test that calls, they're still using the same function under the hood that they're actually testing. So naturally, what he goes do next is that you can actually bundle both of these tests inside an overall test, might call a wrapper test. He simply calls it ad. But within this, there is, a little trick that we'll get to in a little bit, but he's basically nested in those 2 separate test that calls for the baseline functionality and then testing those input values inside this overall test. Now there is a trick to do if you've tried this before and maybe gotten some, you know, warnings from test stat about, you know, a a, testing or an empty test.

He has, at the beginning of this overall block, a call to expect true whether a function exists, called add. And that that apparently will suppress any warnings about an empty test. That was new to me as a little trick there. So this this paradigm works great for, again, this 2 test case. But now in the rest of the posties talks about why would you wanna invest in this instead of just having all these as separate on top of just in the more cleaner organization. But there may be cases whereas you're building a larger code base of tests, you might want an easy way to skip your tests occasionally as you're iterating through development.

So in the next example, always added as a line after the expect true of skipping all the remaining tests below it with a a simple call to skip. So in that way, if you know you're breaking stuff and you don't wanna quite test again, and you just throw that skip in there, keep iterating, and take that skip out, and then it'll just go ahead and run those tests again just like it would before. And then there are some additional benefits you can have there but takes advantage of a couple different concepts that are familiar to an R developer. One of those is having a little shortcut, if you will, for that function that you're testing.

Maybe it has a long function name and you just wanna do a simple letter for it for the purposes of your test. So he gives this add function an assignment to the letter f, and then simply he's able to use that in all the expect equal or the expect error calls going forward. Again, that might be helpful if you have a pretty verbose function name. And then another one is taking advantage of much like in functions themselves, they have their own scoping rules where you might have then we might wanna reuse different parts of this test scope that may be more self contained for this group of tests, maybe not for remaining tests that are outside of this block, you can take advantage of having function having objects inside this overall wrapper, you know, test that that's going that's the outer layer. And then those can feed directly into those sub test stack calls much like you would with nested functions. That's another interesting approach if you wanna cleanly organize things about duplicating these objects in each test stack call of of objects that are related to each other.

Again, it will depend on your complexity to see just how far you you take this. But, yeah, the possibilities you you can go many different additional directions with this. And in the last example, he talks about how you can group the unit tests by maybe some additional criteria. Perhaps it's based on the type of input data. Maybe it's based on another factor. In this case, he's got ways of grouping it based on whatever he's testing for positive or negative numbers. Interesting functionality there that you can build and play. But in the end, the key part I take away from this is that the test that function is pretty open ended after all. Once you know kind of the the quirks to get around that empty warning, you could do some pretty interesting organization in terms of nesting related tests together, sharing objects between them, and overall having a slightly cleaner code base for your your what could be a massive amount of tests, which is I'm just releasing an app in production this week. I have a lot of business logic tests, so this may be something I have to look into for the future as well. So very night fit nice, fit for purpose post here, and I'll definitely take some learnings from this in my next, business logic testing adventures.

[00:10:27] Mike Thomas:

Yeah. Me as well, Eric. This is really interesting, and like you, it's not something that I had tried before. You know, what we're talking about here is a test that call inside another test that call. You know, not just having multiple expect type calls in the same test that block, which I'm I'm sure folks who have written unit tests before are familiar with, hopefully. Otherwise, your your, your code would be quite lengthy. And and one of the ways that I learned to to write unit tests was to look at the source code of some of my favorite r package developers, primarily Max Kuhn and Julia Silgy.

Because unit tests are are kind of interesting, you know, they're they're very different than a lot of the other R code that you'll write. And, they sort of execute in their own environment as well, which makes some of this stuff a little tricky. I learned this the hard way, you know, trying to download a file inside my testing script and handling, you know, the creation of a variable within a test that block that maybe doesn't exist in the next test that block. And as an r package developer, and I I know, Eric, you share a lot of these same sentiments, you can quickly become sort of obsessed with organization of your code, and I think testing is another place that that applies to.

And these concepts, I think, are really useful from an organization standpoint. I feel like I need to go go right back to some of the r packages that we have right now and take a look because I know that there are opportunities to improve, you know, leveraging what Roman's written about here, you know, particularly in some of the the lower code code blocks in this blog post, underneath local scoping where you're developing some variables within this outer test that call, that you're going to use in some of these inner test that calls that I believe will really just make, you know, some of the the code, run quicker and more lightweight instead of defining, like, global variables at the top of your script that are going to stay there for the remainder of all of the tests being executed.

These can kind of be local to just this particular outer test that chunk. So really interesting. I feel like it's kind of a tough blog post to do justice without actually taking a look at the code and reading through it, but it should click really quickly if you take the time. And it's a pretty short and sweet concise blog post here, to read through it. But, another takeaway for me was that I did not know about the skip function from test that, which skips all of the tests below the skip call in that same test that block. So it'll execute the ones above it, but, you know, the all of the ones below it within that same test that block, as far as I understand, will be skipped, which is is really interesting if you are in the the middle of development, you know, if something's failing and you you need to just sort of, make sure that you're able to lock down, what you understand and then, you know, re execute the the tests that are firing so that you can, you know, work on the bug fixes in a safe and efficient environment. So really, really helpful blog post here, some concepts that I had never honestly seen talked about before or used, that I think are really, really applicable to anybody who's developing in our package or maintaining in our package out there.

[00:13:51] Eric Nantz:

Yeah. That skip function was, underrated gem that I dived into a little bit before this only because of necessity. You may find yourself in a situation, we're gonna get in the weeds on this one, where for my app I'm releasing to production, I had a different set of tests that were more akin to operating in our internal environment and our Linux environment versus when I would run it in GitHub actions, that was technically a different environment. And so I had to skip certain things if it was running on GitHub actions that were more, I'm gonna be blunt here, very crude hacks to get around issues with our current infrastructure for the tests that were appropriate for internal use. So, yeah, the skip there are variants of the skip function as well. There's like a skip if CRAN type function, which is a wrapper that detects if this is running on the CRAN server or not. So it won't run those tests where maybe you wanna run them in development, but you don't care as much for the actual CRAN release of a package. Again, there would be different use cases for these. So, yeah, you're invited to check out the the test that vignettes. There's a lot of great documentation there. But this concept that's been covered here, has not been mentioned in the vignettes of test that before. So I'm really thankful to Roman for putting this together for us because I'm gonna bookmark it and test that related resources to use going forward.

Doesn't it seem like yesterday, Mike, when we were sitting near each other at deposit conf, listening to Joe Cheng's, very enlightening talk about

[00:15:40] Mike Thomas:

using AI chatbots and Shiny. Don't you remember, my friend? Oh, I remember it like it was yesterday, Eric. I was gripping onto the edge of my seat.

[00:15:49] Eric Nantz:

Yes. And I I teased you a little bit before that because I had an inside preview. But, nonetheless, I was still blown away when you actually see it in action. And while I hope the recordings will be out fairly soon, once they are out there, you'll wanna check it out, for you and the audience because Joe Chang demonstrated a very interesting Shiny application. At that time, he was using Shiny for Python that had a, in essence, a chat like interface on the left hand margin, and then it was visualizing through a few different, like, summary tables and visualizations, a restaurant, tipping dataset.

But when Joe would write an intelligent kind of question in the prompt saying, show me the the tip summaries for those that are males, Instead of being a shiny app developer trying to prospectively build all these filters in yourself, the the chatbot was smart enough to do the querying itself, and it rerendered the app on the fly. It was amazing. Many in the audience were amazed. Mike and I, of course, are looking at each other. It's like, yep. We wanna use this.

[00:16:59] Mike Thomas:

Yep. We've been skeptical about AI. Have some harsh feelings for it, at some points in time, but, yeah, we wanna use this. I agree.

[00:17:09] Eric Nantz:

So as I mentioned, that was using Shiny for Python, and he had mentioned that the R support was coming soon. Well, that soon is now, and you might call this an inside scoop, so to speak, because while we're talking about is public, it hasn't really been publicly announced yet by time deposit team, so you get to hear it here first. But there is a new package out there, by Joe Chang himself called shiny chat. This is basically giving you as a Shiny developer a way to build in in a module like fashion the chat user interface component inside your Shiny apps. And it indeed on the tin works as advertised where you can call a simple function to render this chat interface in very similar to, like, a chat UI call.

We have a little server size stuff we'll talk about shortly, and then your app will have a very familiar looking chat interface that you can type queries in and get results back. There are some interesting things under the hood here that respect the Shiny itself and giving you that that chat like experience that you see in the external services with some clever uses of synchronous and asynchronous processing under the hood. There is example in the in the GitHub for the package that you can take a look at as well. But there is there is another component to this, Mike, that ShinyChat on its own doesn't do all the heavy lifting much like, you know, robust Shiny development. We always say that for heavy lifting of, like, server processing or business logic, you wanna put that into its own dedicated set of functions or packages.

So hand in hand with shiny chat, we will put a link to this in the show notes, is a new package that's being authored by Hadley Wickham called Elmer. Elmer is in our package to interface directly with many of the external AI services that we've been using, for a while now, such as, of course, OpenAI. There's been now support for Google Gemini. There's support for Claude and support for all WAVA models if you've had those deployed on an API like service. So Elmer is actually the way that Shiny chat from the server side is going to communicate directly with this AI service, and this is where you feed in any customizations to the prompt before the interface is loaded, which is something I've been learning about, recently, prompt engineering.

So Elmer will give you a way to feed that in directly, And then you can also take advantage of, of course, the responses being returned to you, and you can do whatever you want with those. But the real nugget here, and, again, this is actually how that restaurant tipping app works under the hood, is in addition to the prompt being sent to this chat interface, you are able to select what are called tools for this. Now what is a tool in this case? We're not talking about, like, a, you know, tool in the traditional sense. You are letting the AI take advantage of, say, a function or maybe functions from another package to help assist you with taking the result from that initial query or or that message you send to the chatbot and maybe calling something on your behalf to help finish off the process.

So an example I can illustrate here is if you ask a chatbot, hey, how long ago was, say, the declaration of independence written? Guess what? Due to the stateless nature of these chatbots, it doesn't really know what time it is for you right now when you call that. It may try to guess that you're calling it in October or whatnot, but it's not gonna really know that answer. So how to get around this is you can help feed in via this tool argument in Elmer when you set up the chat interface, a function that it can call to help answer these kind of questions.

This opens up the possibilities to help the AI bot get answers to things that through its, you know, training that it's done externally or internally, depending on how you're using it, would not be able to answer on its own. This took this taken me a little bit to get used to, but that time example was one thing that kinda clicked. But that, in theory, is what's happening with this restaurant tipping app that we saw at Pazitconf where Joe built in a tool, I. E. A function to basically execute that what became a SQL query as a result from that question that we asked it. And then it would execute it on the source data that was fed into the app. It was set as a reactive doom. Every output is refreshed in real time after that query is executed.

And that is something that is amazing to me that now we can give the chatbot a little more flexibility. But just like if I was training, like, a a new colleague to get used to one of my tools or one of my packages, You've gotta be pretty smart with it or or I should say be helpful with it. We have a function. What do we talk about? Have good documentation. Have good documentation of the parameters, the expected outputs. That is going to be necessary for Elmer to take that that source of that function or that function call and then help assist you with the syntax you need to feed this in as a tool to the AI to the AI service.

So there is a lot to get used to here, but we'll put a link in the show notes to, again, the the restaurant tipping examples. You can see this in action. But now the combination of Shiny chat and Elmer, we can start building these functionalities into our Shiny apps that are written in r, and we are always scratching the surface here. This stuff I wanna stress is an active development. These are not on CRAN yet, so there could be features that are breaking changes or whatnot. So be wary of that. But they are very actively working on this as we speak, and I'm excited to see what the future holds. And my my creative wheels have already been turning about ways that I can leverage this at my in my day job as this gets

[00:23:47] Mike Thomas:

gets more mature. Yeah. Eric, I mean, you you summarized it very well. I think that presentation by Joe really blew a lot of us away. I think it, created a lot of opportunity, probably a lot of of work for us, right, to to be able to try to integrate these things, especially if any of our bosses saw that presentation, to try to integrate this type of functionality and user interface and, you know, capabilities into our Shiny apps to, you know, I I think not only reduce the number of filters maybe that we need to create, but also sort of allow this sandbox environment for users to play around with the data that we're displaying in our apps such that we don't necessarily have to worry about boiling the ocean for them anymore and, you know, being able to to handle every possible little edge case that anybody could ever want to see, before we just yell at them and tell them to learn how to write SQL against the database. Right? Not that I've ever been there before. Oh, never.

But it it it's very interesting how a lot of this tooling by posit seems to be, you know, sort of quietly taking place behind the scenes. But, obviously, you know, I think this Elmer package is doing a lot of the heavy lifting, and it has a great package down site if you haven't taken a look at it yet. And this tool calling Vignette is is a fantastic introduction to to sort of how that works and how we'll be able to I don't know if customize is is the right word, but really be able to nicely integrate these large language model capabilities into our our workflows, right, and not have as much of a disconnect as I would have imagined there to be at the beginning. So it it's pretty incredible that we we do have this tooling. You know, I think as as Joe said, there are a lot of considerations that you need to take around, you know, potential safety to ensure that these large language models aren't actually getting direct access to the data in your app and ideally just executing a a or the models are returning a a query maybe based on the schema of your data without ever actually seeing, you know, your data itself in the case of, you know, that tipping example where the the model is really mostly returning a SQL query, essentially, that you can leverage to execute against, you know, your underlying data source, which, in my opinion, you know, those are the situations unlike this, you know, this this time example is interesting.

But I think, you know, the the former that I was talking about are are probably the most useful, as in terms of low hanging fruit that I can see applications of these large language models into Shiny apps. So it's really interesting to see all of this start to come together. Obviously, we saw some of this on the Python side first and and really excited that, the ecosystem on the the R side is expanding, just as well. So lots to see here already. I can imagine only more to come. And you, I I believe, Eric, had the opportunity to participate in a little hackathon around this stuff. I haven't gotten my hands quite as dirty yet, but that is really, really, on the forefront of what we're we're trying to do,

[00:27:16] Eric Nantz:

at the day job here. So, hopefully, I'll be able to report back on on some of our findings pretty soon. Yeah. That was, very, very fortunate that I I got the invitation to see some of the preview versions of these interfaces, and, you know, I wouldn't turn that down for anything. And, Mike, though, as we talked in the outset, I have been resistant is kind of a strong word, but it has been very not not very tempting for me to adopt a lot of this yet in my daily work. But I think with the right right frame of mind in this and the right use cases, this can be a massive help. I think as long as we have a way, especially in my industry, to only let it access what it needs to access, but yet this tooling functionality gives us a way to then act as if maybe the AI did get us 80% of the way there, and then we would have to manually do the rest of the 20%.

We're basically giving them a way to get to that 20%, but still run it in our local environment to do it. It's not going back to them to run it. That that is or to get the results back itself, so to speak. It's acting on my behalf. That's a nuance I'm still kinda wrestling with. It's still something that seems a little magical to me, but I think what we'll have a link to is the r version of this side restaurant app example in the show notes as well. The key another key part of this along with the tools supplying the tool function is the prompt itself. So in the example we'll link to, Joe has put together a markdown document that is the prompt that's being sent to it. Basically, it's reading the entire line this markdown file where it's very verbose with the AI, you know, the AI bot about what it has access to, what is expected to do, what it should not do, and then making sure that it doesn't go outside those confines.

It's interesting to see just what the nuances would be when you try this on different back ends, so to speak. Because I have heard that, yeah, things like OpenAI, this works really great almost a 100% of the time. There may be other cases where a self hosted model isn't quite there yet, but that's just the nature of this business. Right? Some of these models are gonna be more advanced than others because of the how many billings of parameters that are used to train the things. So there's a lot of experimentation, I think, is necessary to figure out what is best for your particular use case, but you get the choice here. That was one of the things I was harping on when I first heard about this is I don't wanna be confined to just one model type. I wanna or one service. I wanna be able to supply that as the open source versions do get more advanced. So there's the responsibility aspect is still very much at play for what I'm looking at.

But the fact that now this is our disposal about me having to tap into and again, no no shade to our friends in Python, but I've heard that the langchain framework can be a bit hairy to get into, and Elmer is really trying to be that very friendly interface on top of all this about me having to engineer it all myself. So I'm super impressed that with about 4 hours of work I did in this hackathon to build an extension of this restaurant type example with podcast data, I was able to get to even about, like, 85% of the way there in that amount of time.