The latest updates to the rayverse bring new meaning to smoothing out the rough edges of your next 3-D visualization, the momentum of DuckDB continues with the MotherDuck data warehouse, and the role nanoparquet plays to bring the benefits of parquet to small data sets.

Episode Links

Episode Links

- This week's curator: Eric Nantz: @[email protected] (Mastodon) and @theRcast (X/Twitter)

- Sculpting the Moon in R: Subdivision Surfaces and Displacement Mapping

- Joining the flock from R: working with data on MotherDuck

- nanoparquet 0.3.0

- Entire issue available at rweekly.org/2024-W26

- Use the contact page at https://serve.podhome.fm/custompage/r-weekly-highlights/contact to send us your feedback

- R-Weekly Highlights on the Podcastindex.org - You can send a boost into the show directly in the Podcast Index. First, top-up with Alby, and then head over to the R-Weekly Highlights podcast entry on the index.

- A new way to think about value: https://value4value.info

- Get in touch with us on social media

- Eric Nantz: @[email protected] (Mastodon) and @theRcast (X/Twitter)

-

- Mike Thomas: @mike[email protected] (Mastodon) and @mikeketchbrook (X/Twitter)

- The Amazon Session - Ducktales - Gux - https://ocremix.org/remix/OCR00402

- Doomsday - Sonic & Knuckles - elzfernomusic - https://ocremix.org/remix/OCR02532

[00:00:03]

Eric Nantz:

Hello, friends. We're back at episode 170 of the our weekly highlights podcast. Gotta love those even numbers as we bump up the episode count here. My name is Eric Nance, and I'm delighted you joined us from wherever you are around the world listening on your favorite podcast player. This is the weekly show where we talk about the awesome resources that have been highlighted in this week's current, our weekly issue. And I'm joined at the virtual hip as always by my awesome co host, Mike Thomas. Mike, how are you doing today?

[00:00:32] Mike Thomas:

I'm doing well, Eric. I'm taking a look at the highlights this week. I see that there's there's no shiny highlights, so this couldn't have been curated by by you.

[00:00:43] Eric Nantz:

Oh, the plot thickens. Oh, yes. It was. It was my turn this week. Good. That's so sure. There is some good shiny stuff in there that we may touch on a bit later. But, yeah, it was my my turn on the docket. So as usual, I'm doing these curations in many different environments, Mike. You're gonna learn this as your, little one gets older. You gotta be opportunistic when the time comes. And I was curating this either at piano lessons or swim practices or whatnot, but it ended up getting curated somehow. So we got some fun, great post to talk about today. Never as short as a great content to draw upon.

But as we always say in these, in these episodes, the curators are just one piece of the puzzle. We, of course, had tremendous help from the fellow curator team on our weekly as well as all the contributors like you around the world who are writing this great content and sending your poll requests for suggestions and improvements. So we're going to lead off. We're going to get pretty technical here in a very have in many different domains that honestly you would least expect. But we're gonna talk we're gonna go to the moon a little bit here, Mike. Not quite literally, but we're gonna see how R with the suite of packages authored by none other than Tyler Morgan Paul, who is the architect of what we call the raverse suite of packages for 3 d and 2 d visualizations in R.

His blog post as part of this highlight here is talking about how to use r to produce not just images of the moon and other services, but very accurate looking images with some really novel techniques that I definitely did not know about until reading this post. So I'll give a little background here, but when you look at graphics rendering and you'll see this in all sorts of different domains, right, with, like, those CGI type movies or images or, you know, animations, of course, gaming visuals and whatnot that have to do with some form of polygons. Little did you know, little did I know at least, that the triangle geometric shape is like the foundational building blocks of many of these computer graphics, which gets you really long ways, but it's not as optimal when you have to render a surface that is on 2 different sides of a spectrum.

1st, maybe something that looks completely smooth. No artifacts. No, you know, jagged edges or whatnot. Or the converse of that, something that has unique contours, unique bumps, if you will, such as, say, the surface of the moon with its craters and other elevation changes. Now there are 2 primary methods that Tyra talks about here that can counteract kind of the phenomenon of these triangles not really being optimal for this rendering. One of these is called subdivision where, in essence, these polygons are subdivided further into micro polygons often smaller than a single pixel, which means we're blurry looking at something small here to help bring a smoother appearance with tweaking a certain parameters.

And then on dealing with the converse of that, again, like dealing with bumpy surfaces or contours, you have what's called displacement of the vertices of these associated polygon to help bring this kind of artificial detail to the surface that, again, mimics what you might see in the real world with these contoured surfaces. And Tyra says that the Raver suite of packages now have capabilities to handle both of these key algorithmic kind of explorations or rendering steps to make these more accurate looking visuals. And as I think about this, as we walk through this example here, I harken back.

Again, Eric's gonna date himself here to my retro gaming roots where I remember very distinctly. It was in the, you know, early nineties, maybe mid nineties that the local arcade started having these racing games that were not those, like, sprite filled racing games like the yesteryear, like Out Run or whatnot. I remember vividly Sega making a game called virtual racing, which was on the first times I had seen in an arcade really robust looking racing cars in 3 d type perspectives, but you could tell they were made with polygons. And they were smooth. Some were smooth, and yet some are really jagged as heck when you went out crashes or sparks, and you could kinda see if you, like, if you had a pause button in the arcade, you can see these little artifacts that appear that look like little geometric shapes.

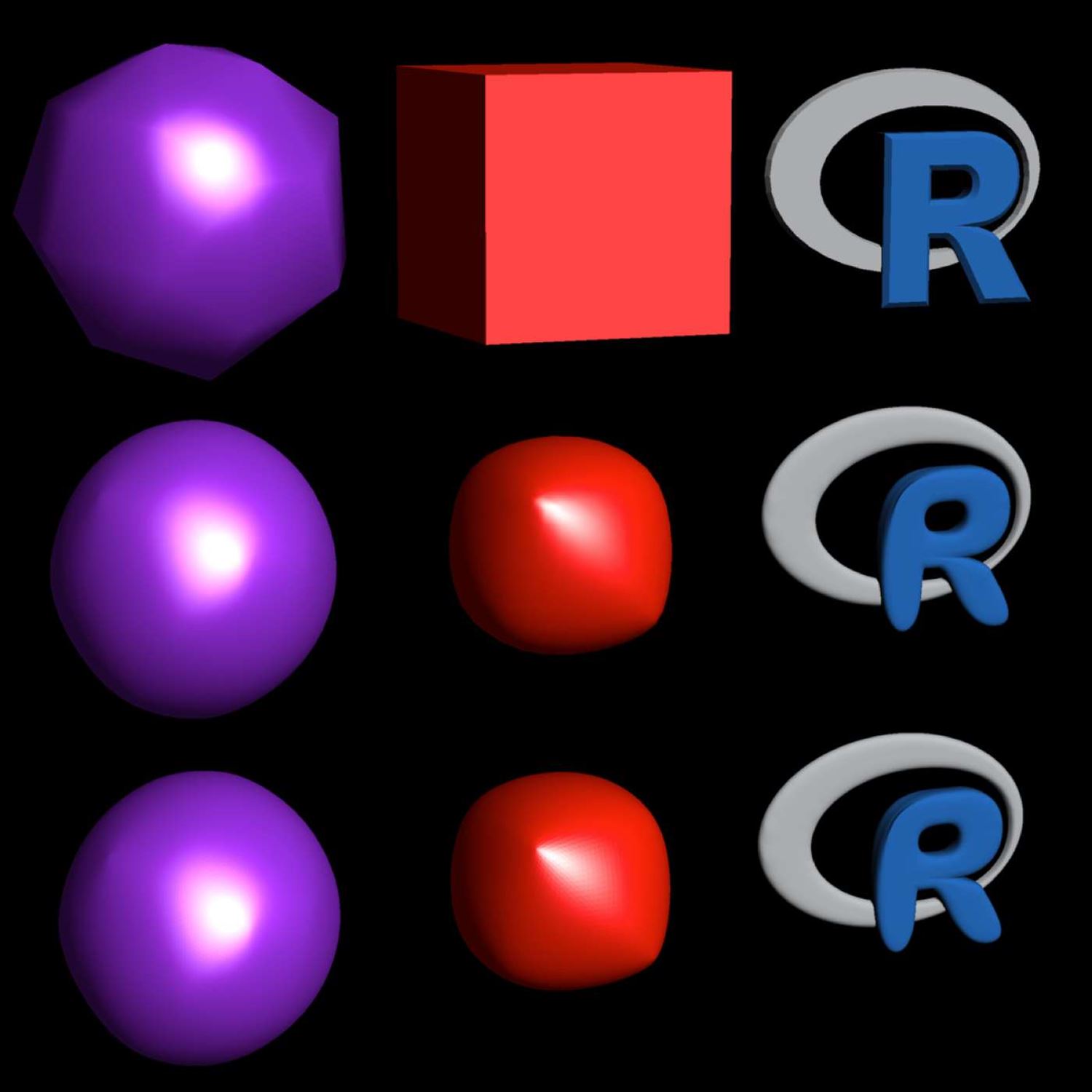

And in essence, that's what can happen when you render an image in the beginning, and he's got an example where he basically renders what looks to be, kind of like a cross between a sphere and a hexagon in a 3 d contour and then a, cube and then the r logo. But when you render this first of a few parameters that he does with one of his, packages, you can see, yeah, they're little polygon shapes. They look like what I would see in that kind of first attempt at that virtual racing game. But then he starts to show example code, again, new to the ravers of called subdivide_mesh, this function which lets you define just how deep you do this subdivision.

And he starts with levels of 2, and then he, you know, re renders the image. And now, in the second image, you look the left image. Again, you want to look at this as we're talking through it if you have access to the screen. That first, ball on the left looks like the Epcot center or a golf ball and, on on the golf course. You can tell. You can see those little triangles splitter throughout, which is not what he wants. He wants to make this look completely smooth. So guess what? It's all iterative in these steps. He tries another subdivide mesh call with, you know, first trying to make things look a little more smoother without going new subdivision levels, and it doesn't quite get the job done. They still look, you know, a little bit off, but he's rounded out some of the edges to that aforementioned cube, but he's still gotta go deeper. And, basically, he finds this sweet spot of, like, subdivision levels of 5, which means this is upping the count on these little small triangle shapes that are composed in each of these images.

And going from the start to finish of this, that added many, and I do mean many additional triangles to each of these shapes as compared to what he started with. It's a huge increase, and, in fact, it was a 1024 fold increase in these number of triangles, which may not be the best for your memory usage in your computer, although I there are no benchmarks around that. But I know from my, you know, brief explorations and things like, what's a blender for rendering, 3 d and Linux and other software, that as you make these shapes more complex, yeah, your computer is gonna be doing a lot more work with that. So in the end of this example, he's got 3 versions of the the rendered scene, what he started with, and then one in the middle, which is kind of like a compromise of like the subdivision levels and kind of moving some of these vertices around, and then the last one of using like all these triangles at once.

The good news is is that this shortcut approach in the middle, you can't really see a visual difference of the naked eye between that and the more complex version with the additional triangles. But the key point is that, a, Ray the Rayverse packages support this, and you're gonna have to experiment with it for your use case to get that sweet spot of the smoothness that you're looking for. So that was a great example how you want to give this kind of smooth appearance without those artifacts fault coming into play. Now, we turn our attention to the bumpier contour surfaces with, say, the moon as inspiration.

And he mentions that this will be familiar to anybody who deals with GIS type data in the past with elevations. He has a nice render of both the moon image as well as, moderate bay where you can see the contours very clearly in the black and white images, And then he starts by drawing a sphere of what looks like the moon, but when you look at it, it just looks like a very computer graphic looking moon with, like, a shade on the left and light on the right, but you would not tell that's anything really. So then comes the additional kind of experimentation of, okay, how do we first figure out what is the current displacement information that's in that image so you can get a sense on what's happening here and how many pixels are being sampled to help do this rendering by default.

So there are some handy functions that he creates to kind of get into this metadata. And he notices that in the first attempt, there's only about point 0 9% of the pixels from that moon image are being sampled to produce this more artificial image, and that's why it's not looking like a moon at all at that point. So gotta up the ante a little bit. Again, kind of like a iteration of this to figure out, okay, maybe I need to use more pixels to sample in this. It starts going from like 1.4% of them, Still not quite looking right as you look at the post. Ups it further, and he's got a handy function called generate moon mesh that he kinda ups the levels 1 by 1.

And eventually, he gets to a point where, hey, we're now we're getting to what looks like the craters on the image where about 5 or 6% of the pixels are being sampled. Still not quite there yet, but he ups the ante even more with this, levels. And then he finds what looks like, you know, a really nice looking moon where it ends up sampling about 89% of the pixels, which means that there is a lot going on behind the scenes in this image. And in fact, this would take up a lot of memory, And you can see there is kind of a there's a point of diminishing returns the more pixels you subsample here for this displacement. Not really a lot of change visually. So ends up, he finds a sweet spot between not going to the extreme of, like, 2,000,000 pixels resampled somewhere in between.

And hence, he's got a really nice visual at the end where you can see it it's getting getting much closer once he brings us back to the sphere shape. And there's a nice little phenomenon kind of towards the end where it looks like at each of the north and south poles of the moon, when you do it by default, it kind of looks like things are being sucked in like a vacuum. It's hard to explain. But then when he does the applying the contour more effectively, you see that it just looks like any other surface on the moon, nice little craters and whatnot.

As you can tell, I've been doing my best to explain this in an audio form, but what's really cool is that you can see kind of Tyler's thought process of when you have a problem he wants to solve with his raver suite of packages. These steps that he takes that go from start to finish, not too dissimilar to what I see many of the community do when they teach things like ggplot2. Start with your basic layer, start to customize layer by layer bit by bit until you get to that visual that you think is accurate given the data you're playing with. And in case a toddler's visual is here, to closely mimic what you might see if you put your telescope out there and look at the moon at night. So, again, a fascinating look to see the power of r in graphics rendering, which if he had told me this was possible 10, 15 years ago, I would have laughed in your face. But this is really opening the doors, I think, to many people making so many sophisticated images, not just in the 2 d plane but also in 3 d and really insightful walk through here. And you can tell when you read Tyra's writings just how much time and effort he's put into these packages to make all these capabilities possible. But yet with, you know, geological and very fit for purpose past.

So a lot to digest there, but, again, I think it's a very fascinating field.

[00:14:20] Mike Thomas:

Eric, I I couldn't agree more. I'm always fascinated by Tyler's work on this particular project. I think, you know, when the raverse sort of first came out, it was pretty revolutionary, I think, to the our ecosystem. You know, we had ggplot, and we could do, you know, a a lot in in ggplot and some of the other plotting packages that were available. But in terms of 3 d visualization, animations, things like that, you know, we never had anything like that as far as I know until Tyler came along. So these suite of packages in the in the Rayverse and in particular the ones that he's highlighting today and their new functionality are RayVertex and Ray render are pretty incredible.

I have tried to do some of this myself in the past. I think, I think you can expect your laptop fan to to a little bit as you try to do this. So get your GPUs ready, if you're especially if you're trying to add, you know, additional complexity and do some pretty in-depth things within these packages. But the the fact that we can do this at all is is absolutely incredible, and I loved the callback to sort of your early, you know, eighties nineties video game experience because that is that exactly what a lot of this blog post reminded me of as he leverages that subdivision levels argument in the subdivide mesh, function.

And you can sort of see how the the pixelation just starts to smooth out as that that number increases in terms of how many, subdivision levels are being used. And it's it's almost, you know, conceptually, like, following video game graphics from the eighties nineties to the 2000 as you sort of get away from these these triangles and the pixelation into something that looks, you know, much smoother, into the naked eye. You you really can't even see the pixelation at all. Great summary by you. I'm not sure if there's a whole lot more to add to it. It's an incredibly visual blog so it's difficult for us to do it spend a lot of time in, you know, 3 d visualization, I think you'll still find it fascinating.

[00:16:35] Eric Nantz:

Yeah. And it does remind me there is ripe opportunities that we often see shared on social media from time to time. They'll call it, like, accidental art when you're trying to do a neat plot, yet it doesn't come quite outright, but yet it's so interesting to look at. You wanna post it anyway. These things are ripe for accidental art, especially if yours truly is the one coding it up. But, I do think as you look at the evolution of these images, back to that racing example, it's like when I saw that virtual racing game back in the early nineties and, again, you could tell it was like Sega's, you know, first real attempt at making somewhat perform at Polygon renders.

And then it was like a couple years later, those of you who remember that Daytona USA racer game, it looked real. Like, everything was smooth. Everything was sharp. Everything is 60 frames per second. That is like you can have that same transformation as you play with these displacement, parameters and, again, the contour parameters. It's it's fascinating. It's absolutely fascinating. And what, who would imagine r could be an engine for graphics like this? And I've been following on social media kinda the other side of the spectrum where it's a slight tangent here, but Mike FC AKA Cool But Useless have been making all these packages that help me make sprite rendering even more easier in r itself.

And it's like, my goodness. We can make almost any type of game in r now. It's just a matter of time, really.

[00:18:08] Mike Thomas:

Absolutely. It's it's incredible stuff. Incredible stuff. And I do remember Daytona USA very fondly. How much fun was that?

[00:18:16] Eric Nantz:

Oh, lots of quarters of spending that one, and then, of course, the computer racers have just eat my lunch. But that was part of the design to get your money back in those days. So we're going to shift gears quite a bit here, to get into more of the data side of things because there are some really promising developments in some of the ecosystems that Mike and I have been speaking really highly about in recent episodes of our weekly highlights. And in our next highlight here, we're gonna go back to, the now getting a lot of traction, so to speak, the DuckDb paradigm, the DuckDb database that just got supercharged with a new plugin and a new capability of using a service that's new to us from the our side of things.

So and it happens to be called Mother Duck, and that's an awesome name. But, Mike, what is this all about?

[00:19:17] Mike Thomas:

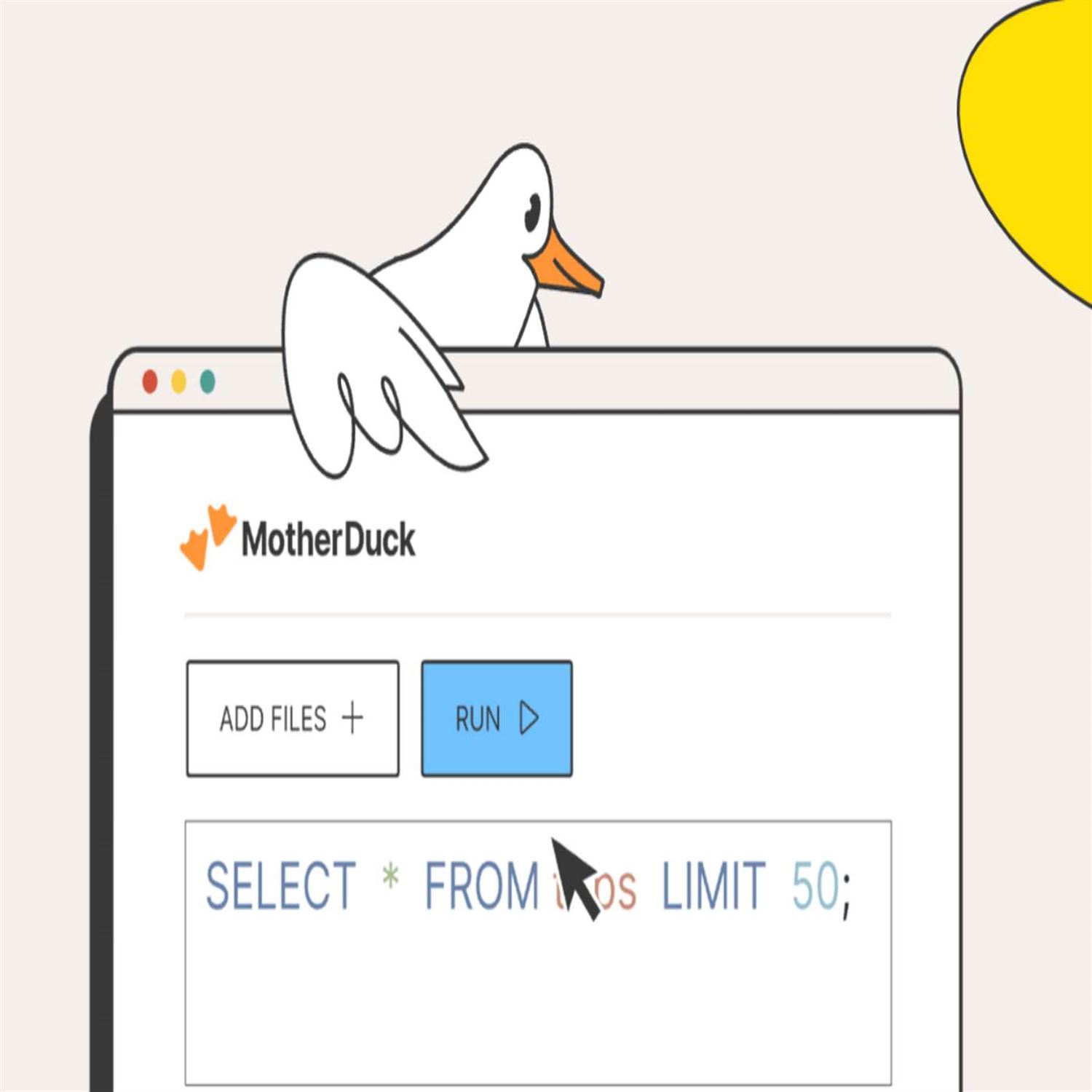

So Mother Duck is a way to run as far as I know. It's sort of a whole cloud based data warehouse system, or platform, from the developers of DuckDb themselves that allow you to not only store data, in the cloud but to also run DuckDV itself in the cloud so that you don't need DuckDV installed on your own laptop. You just need to be able to authenticate into your Mother Duck account that you have, and you can run, you know, serverless cloud based analytics using DuckDb, but not needing anything installed on your own laptop which is is really cool besides, you know, the the packages to authenticate into it. So currently, and and, you know, one big component of this that that's noteworthy that I think we should touch on is DuckDV just released version 1.0.0 on June 3rd.

So this is incredibly exciting, you know, for the folks that have been following this project, for the folks that have been working on this project that we have a first sort of major, release of the project. And it's, you know, I think it's a a framework that we've seen, play out a few times, you know, in the data science ecosystem where a team will develop an amazing open source, framework or functionality. I think we've seen this, with the DBT package. If you're if you're, familiar with DBT software that I think allows you to do, more versionable ETL, They also have, I believe, a paid offering of that as well. I know that the team at Pymc sort of has some similar things going on, and it looks like the DuckDV team obviously has this incredibly powerful open source software that we can now use for doing some pretty incredibly efficient, ECL and and SQL querying on large datasets in, like, no time at all. But they've also built out, obviously, a, sort of privatized side of the business that allows them to to hopefully make money and to continue to, enhance DuckDV itself and that's this Mother Duck offering that we're talking about here. So there's pretty good documentation on, how to connect to your mother duck, service from Python but it looks like there's not necessarily any documentation out there yet on doing this in R.

Although the author of this blog post, Novikha Nakhov, notes that it's it should be pretty straightforward to do so, if you follow sort of the the Python framework and just adopt it leveraging, you know, what we know about how to connect to DuckDV databases using r. So that's what, Novica is doing in this blog post. He is outlining how you would go about connecting to a mother duck instance from our, leveraging our 441, on Linux with DuckDB version 1.0.0. It's pretty easy to connect, to, you know, a DuckDb database and in memory database and he has a nice little code snippet of how to do this, how to populate that database using a data frame, and then how to query it using SQL syntax. And, it also notes that we do have the new duckplier package that would allow you to do this in a more dplyr like approach as opposed to having to write a sequel string in your db get query argument. But for the purposes of this blog post we're not going to explore Duckplier.

So I Novica details the Python approach first to connecting to to mother duck, and really it's pretty simple. And this Mother Duck Extension actually comes native to the Python duckdb package. And it's just a little one liner essentially that allows you to connect to your mother duck instance. You'll automatically get a notification in the terminal telling you what URL you should enter in your browser to be able to authenticate to your mother duck service, and then you're essentially good to go from there. In R, it's a little little trickier. The syntax looks pretty similar. It's a a one liner to try to connect to your your mother duck instance.

But one of the first things that you have to do is actually from the terminal, I think write a little bit of code, to create a local database that's called md or shorthand for for mother duck. So the reason that you have to do that at nova deposits is that, the duck dp package for r doesn't automatically figure out that it needs to load this additional mother duck extension the way the Python package does. So you have to sort of do that yourself but, again, Novica has all the code for us to do that here and it's it's fairly straight forward. You have to run a DB execute command to load this mother duck extension and then you're pretty much good from there, to be able to connect your mother duck instance and write your SQL queries that are going to execute in the cloud as opposed to locally.

So it's a a fairly, you know, straightforward blog post. It's really really helpful, I think, for those of us that are exploring DuckDB and want to try out Mother Duck. I will note that there's a free tier of the mother duck offering that gives you I think 10 gigabytes of storage, up to 10 compute unit hours per month which is probably a lot, you know. I'm not sure, you know, we know how fast DuckDV queries execute. That's right. Most of my DuckDV queries even on parquet files, you know, that are gigabyte, 1 or 2 gigabytes in size will run-in maybe a second or 2, and there's no credit card required for this free offering.

So there's no reason to not try out this mother duck service if it's something you're interested in. And if you need to go up to, the the paid tier, it's $25 a month per organization, I guess not per user, which is pretty incredible. If you're an organization that wants to leverage this mother duck service, that gives you a a 100 gigabytes of storage and a 100 compute unit hours per month, which I, you know, unless you're doing some pretty crazy stuff, I can't imagine that you would you would hit that. So I think it's a very sort of generous price point that we have here, and, obviously, it's amazing that we have this free tier that allows you to to try it out first, if you if you want to and and maybe that's all you need as well. So pretty incredible. Great walk through by NovaCon on how to use this in R because up until now, I don't think there's been any documentation on how to connect to, the mother duck service from our and it seems like there there was a little tricky hack that he pointed out. That's definitely going to help. Those of us who wanna try this out ourselves, probably save us a lot of hours. So we're very grateful that he went down that rabbit

[00:25:57] Eric Nantz:

That's for sure. Ever since 1.0 was released. And I do recall, that's for sure, ever since 1.0 was released. And I do recall having to do some extension installation in my explorations a few weeks ago where I had to install a couple extensions, one of which was to help interactive object storage in AWS that didn't come by default. But, yeah, it's a similar syntax with what, Novica outlines here in this post. And, yeah, I guess, I'm interested in exploring mother duck to just see, you know, on a on a very basic basis just kinda how it plays in my workflows. One thing I'm immediately thinking of, Mike, is that if you're an organization or you're a smaller or big company and you wanna leverage DuckDV as your database back end for things like shiny apps or whatnot. It sure does seem like having that hosted in a data warehouse would be an intriguing idea to help offset some of that storage footprint from you to that to that vendor. Of course, we were remarking in the preshow that, you know, you you wanna at least have, like, a couple, you know, plans, if you will, of where you host these files because, you never know what can happen on hashtag just saying.

But in any event, what's nice about this service, I think they have some examples I was perusing yesterday where they did hook up a couple I don't know. It was either observable notebooks or some other custom web apps with, say, the New York City taxi data and doing simple queries on that and some drop downs. And it was, as you said, blazing fast with these queries to get the results back to the browser and not making your browser do all the data munging itself. It's gonna, know, do that on on the server side. And it does look like they're also exploring interactions with, wait for it, WebAssembly too. So there's gonna be lots of interesting, I think, applications to play here. And, again, I'm intrigued to see to see where this goes in the future.

[00:27:59] Mike Thomas:

Absolutely. Me too. It's, an exciting space to watch. I've only dipped my toe in it. One really cool thing about the RStudio IDE is if you do have a dot SQL file in your RStudio IDE, every time that you save that file if you have a more recent version of RStudio, it'll re execute the query for you and show it up in in sort of the query output pane of our studio and that works great in duck DB as well I was playing around with it against parquet file, just editing my my DuckDV query and seeing the results come out real time without even having to, like, highlight the code and and click run or anything like that. It's pretty incredible.

[00:28:40] Eric Nantz:

All these save so much time when you add it all together. That is for sure. Did you say parquet files, Mike? I did, Eric. You sure did, man. That's a perfect segue to our last highlight today because, honestly, one of the building blocks that we have been, you know, espousing the praises of in many recent episodes is parquet as kind of this next generation data storage format. And, certainly, when you hear us talk about a lot of the use cases are when you have a collection of large data that you can do pretty, you know, sophisticated group processing with. So you can get really fast and and real time kind of analyses, being executed on it. But that's not the only use case because our last highlight here is, awesome update to the nano parquetr package, created by Gabor Society, a software engineer at posit. And his latest blog post talks about some of the key updates to this package and some of its use cases. So, Mike, why don't you walk us through what's new here?

[00:30:01] Mike Thomas:

I'd be happy to, Eric. You know that I am, want to sing the praises of the parquet format and everything around it. So very excited about this Nano Parquet. Our package and the 0.3.0 release, that Gabor worked on, I believe, Hadley may have worked on it as well and some others, on the the Tidyverse team at Posit. So one of the reasons that they created the Nano Parquet package is that, they wanted to be able to, you know, interop with Parquet files without necessarily needing, some of the the weight that comes along with like the arrow package because the arrow package does have a fair amount of dependencies on it and it can be a little heavy to install.

So one of the things about this nanoparquet package is that it has no package or system dependencies at all other than a c plus plus 11 compiler. It compiles in about 30 seconds into an R package that is less than a megabyte in size. It allows you to read multiple parquet files which is in a traditional sort of data lake, structure very very common, with a single function, you know, sort of like what we have in the reader package. I I don't know if this is little known or if it's well known but if you just point that to a directory instead of a file it will read in all of the files in that directory assuming that they have the same schema, and essentially append the data together into a big data frame. And that's the same thing that the nanoparquets readparquet function will do for us. It also supports writing most our data frames to, Parquet files and has a pretty solid set of type mapping rules between our data types and the data types that get embedded as metadata in a parquet file, which is, you know, again, another reason why, you know, parquet format exists and I think was another part of the motivation for this nano parquet package that it gets a little bit of a reputation, the parquet format, for only being for large datasets.

Like, you only wanna use parquet file format when your your data is big. And, I think what Gabor is trying to argue here in this blog post and that I would agree with is that that's not necessarily, you know, the case. And I think that anyone can make a case for leveraging the parquet for file format for storing data of of any size. And one of the, I think, big reasons for that is, the, you know, ability to not only have the data in the file but also metadata around the data including the data types such that when you hand that parquet file off to to someone else, they don't have to in their code the the way we used to do when we read a CSV and and define what the data type for each column that's coming in. You don't have to do that because it's embedded in the file itself. So we have a lot more safety around, you know reading data frames and writing data frames and ensuring, portability, you know, not only between languages but between users essentially so that, you know, what you see is what you get.

So I I think that's really helpful. You know, we know that Parquet is performant and typically a smaller size than, you know, what you would store in a traditional delimited type of file, which is is helpful as well. And, you know, there's additional things like concurrency, a parquet file can be divided into to row groups so that parquet readers can can read, uncompress, and decode row groups in parallel parallel as Gabor points out. So just a lot of I think benefits for leveraging the parquet file format and I think this is maybe one of the first blogs that I've seen make the case for the parquet for file format for small data as opposed to big data. So I'm super excited about the nanoparquet, package because I think that there are some particular use cases, you know, potentially Shiny apps that I'm developing that are maybe reading a a smaller dataset where I don't necessarily need to, leverage the whole Arrow suite of tools and maybe I just need to to read that data set in, you know, one time, from a parquet file, and the nano parquet package might be all I need.

[00:34:23] Eric Nantz:

Yeah. I'm I'm with you. I'm so glad they give, you know, the spotlight not just to the the typical massive, you know, gigabyte gigabyte datasets. I think what Nanoparque is doing here, again, great for the small dataset, situation, but I really like these metadata functions that are put in here too because I think nano parquet could be a great tool for you, especially being new to parquet, getting to know the ins and outs of these files, knowing, like you said, the translation between the r object types and the parquet object types in the dataset. There are lots of handy handy functions here that maybe they are part of the arrow package. I just didn't realize it, but to be able to quickly have these simple functions that grab, say, the schema associated with parquet, grabbing the metadata directly in a very detailed printout of, you know, a nice tidy data frame of these different attributes. I think this is a great way to learn just what are the ins and outs of this really powerful file type. And, of course, Gabor is quick to mention some limitations for this. He does say he probably wouldn't recommend using this to write large parquet files because this has only been optimized for single threaded processing.

But with that in place, I still think this is an excellent way to kind of dive a little bit or really deep into what are the ins and outs of parquet. And then when you're ready for that transition, say, to a larger datasets, he obviously at at the conclusion of his post calls the Arrow project out as well as DuckDV out. So you've got it all it all leads somewhere. Right? You may your your situation may be that Nanoparquet is gonna fit your needs, and that's awesome. More power to you. And then as you escalate your data sizes, then you can still use par k. You're just maybe transitioning to a different package to import those in or the write those out. So I think it's it's a great introduction piece as well as I'll be honest. I'm putting on my life sciences hat for a second.

I would absolutely love to use parquet format as opposed to a certain format that's in that ends in 7 b that. You know what I'm talking about, those out there. This would be a game changer in that space.

[00:36:50] Mike Thomas:

I mean, the word parquet just sounds a lot smoother than, you know, SaaS 7 beat at. It it sort of sounds like, I don't know, r two d two or or I don't know. You know? Well, at least r two d two out of personality. Oh, goodness. Let's switch quickly and one acknowledgment, that they that Gabor makes in this blog post that I do wanna highlight just for the sake of talking about how small of a world it is out there in the data science data engineering community is nanoparquet is actually a fork of an R package that was being worked on called mini parquet, and the author of mini parquet that r package was Hans Nielsen, who is the founder of DuckDV.

[00:37:35] Eric Nantz:

Well, well, well, it all comes full circle, doesn't it? Oh, this is what a fascinating time. Awesome awesome call out. And I believe Hans will be keynoting at positconf this year, if I'm not mistaken.

[00:37:49] Mike Thomas:

You are correct. Yes. I As well as like every other data science conference, I think, this year.

[00:37:56] Eric Nantz:

He's been a busy man for sure.

[00:37:58] Mike Thomas:

Deservedly so.

[00:37:59] Eric Nantz:

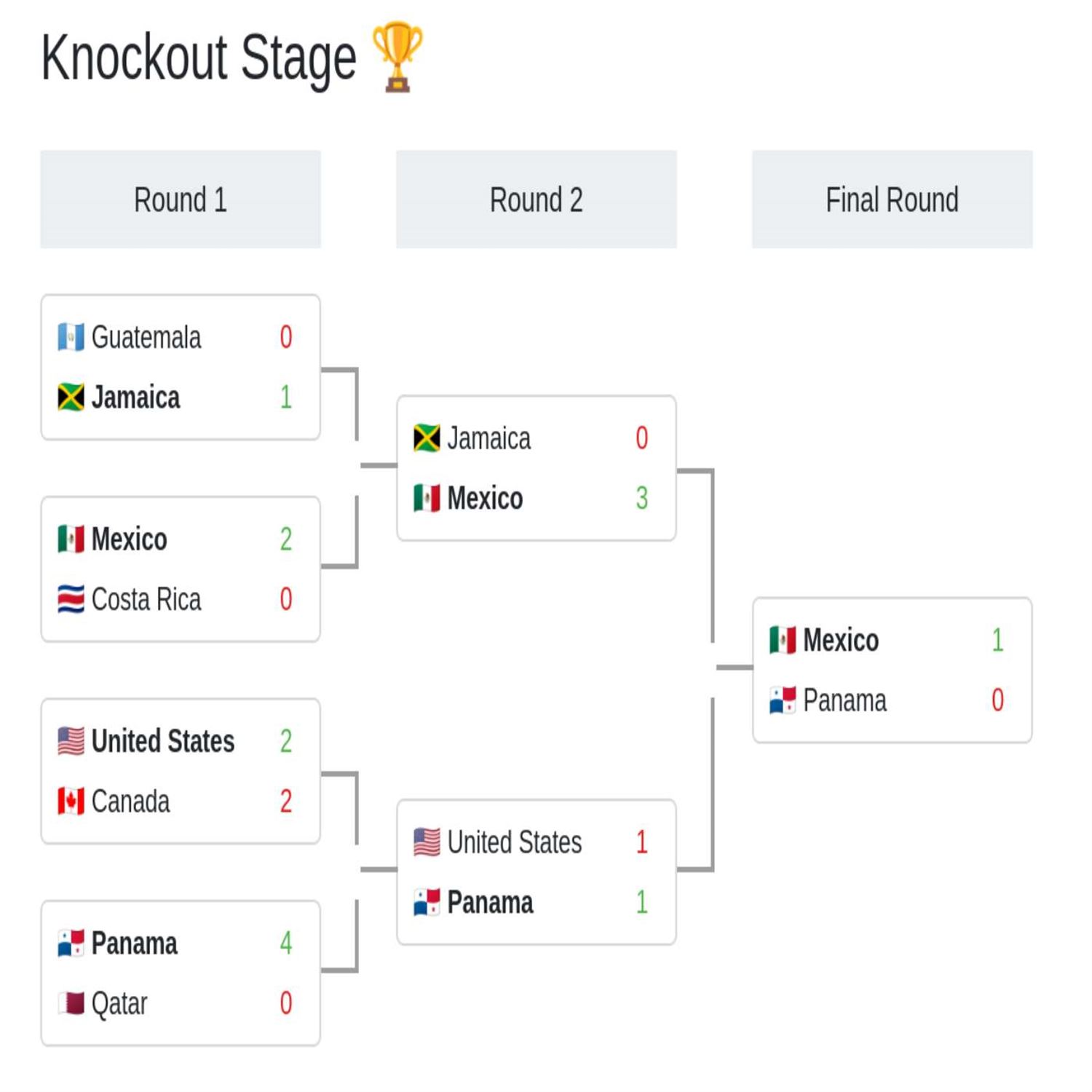

Yeah. Lots of momentum here. And, again, it's great to see every every all these frameworks and are now being a great beneficiary to this amazing work and giving us the tools, multiple tools to interact with these these systems. However, like you said, we have multiple packages. They're not with DuckDV itself, not multiple packages that interact with parquet. You've got lots of choice here. And, yeah, the time is ripe as it does seem like we're we're moving on to, like, these newer newer formats, these newer database formats that the the sky is indeed the limit here. And, yeah, thanks for riding that ship. I was about to go on more rants about another format, but we're gonna keep it positive here because this issue has much more than just what we talked about here in the three highlights here. I had lots of fun looking at the new packages, the updated packages, and much more. So we'll take a couple of minutes for our additional fines here. And, yeah, going back to the, sports theme for a second, now that we have a lot of interesting tournaments going on right now in the world of soccer and football. You might wanna visualize some of those, you know, head to head matchups, and I've got the package just for you for that one. A new package called brackets offered by Vera and Limpopo, who you might familiar be familiar with. She's been a frequent contributor to the shiny community and many talks and presentations.

But brackets is an HTML widget that lets you very quickly visualize the bracket of a tournament. And I could see lots of use cases in the world of sports analytics for darn sure, but the ever fascinating part of this package is that it was actually built during a course that Virla teaches about supercharging your HTML widgets, which is part of the analytics, platform that she recently joined alongside David Grandgen. So they've been both, hard at work on some of these courses about Shiny and HTML widgets. And, yeah, Brackets is one of the products of those courses. But, yeah, when I get back to doing more sports analytics in the future, I think I'm gonna put Brackets in my Shiny app just for fun alone, but I could see lots of lots of cool utility with that.

[00:40:20] Mike Thomas:

That's a great call out, Eric. And when I saw that, really, I guess, clean way to visualize, you know, tournament brackets, I was I don't know. I thought it was super cool too. So I'm I'm very glad that you shouted it out. I am not surprised. I did not realize that Virla is the one who put that together, and I'm excited to see more from that, especially, as we continue to dive deeper into a couple tournaments in my favorite sport, soccer or football for the rest of the world. A lot of bracketology going on these days so looking forward to more in that, area. One call out, that I wanna make is a Frank Harrell's blog, Statistical Thinking, has a new slide deck, that he gave as a presentation at the International Chinese Statistical Association Applied Statistics Symposium in Nashville, recently, and the talk is titled Ordinal State Transition Models as a Unifying Risk Prediction Framework, and he presents a a case for the use of discrete time mark of ordinal longitudinal state transition models.

If if we can pack all of those words into the same sentence and really the purpose there is is estimate estimating risk, and expected time in a given state, and and it's really, you know, in the vein of clinical trials in that area. But I took a look at these slide decks and, state transition matrices and models are something that I deal a ton with in, banking and financial services because most, loans banks have a particular rating assigned to that loan based upon, how risky it is to the bank, you know, the likelihood of that loan going bad, essentially, or likelihood of re repayment or or the borrower not repaying it. And, you know, they continue to to reevaluate that state that that loan is in.

You know, it could be monthly, it could be quarterly, it could be at some longitudinal time horizon. And then we want to take a look at how the volume within that loan portfolio shifted from one state to another, across a given time period. So I have in our package out there called migrate that is due for some updates, or due for a re release on crayon. We've had some work done on it recently but I just need to push it over the hump. So, I would check out these slides if you're interested in this type of stuff but mostly I'm calling, this slide deck out as a call to action for myself to get some work done on the migrate package and hold myself to it, out in the public here.

[00:42:57] Eric Nantz:

Hey. Our weekly has many benefits. Right? Making you do more work. That's awesome.

[00:43:03] Mike Thomas:

Yes. Right. But you're a great set of slides by Frank.

[00:43:06] Eric Nantz:

Yeah. Frank did a terrific job here, and and you you tell us a small world. I remember a lot of the the, you know, the mathematical notation and the concepts here because my dissertation very much had a component of theory around state transitions and competing risk methodology and whatnot. So I'm getting flashbacks of writing that chapter in my dissertation. It was not easy back in those days. But Frank has is one of the great thought leaders in this space, and, yeah, it's great to see him sharing this knowledge in many, many different formats and many venues. And there's so much more knowledge you can gain from the our weekly issue. Again, we could talk about it all day, but, yeah, I wish we had that time. But we're gonna invite you to check out the rest of the issue. Where is it you ask? Oh, you know where it is. It's atoroakley.org.

It's right here on the on the index page, current issue, as well as the archived all previous issues if you wanna look back to what we talked about in previous episodes. And, also, this is a community project. Again, no big overload company, you know, funneling down our vision. This is all built by the community, but we rely on your contributions. And, honestly, the easiest way to do that is to send a poll request to us with that great new package, blog post, presentation, resource, call to action. You name it, we got a section for you in the RN Week issue all marked down all the time. Just send us a pro request, and we'll curator of that week will get it into the next issue.

And, also, we wanna make sure we hear from you as well. We, of course, love hearing from you. We've had some great shout outs recently, and, hopefully, you keep that coming. The best way to get a hold of us is a very few ways. We got a contact page linked in the episode show notes. You can directly send us a message there. You can also find us on these social medias where I am mostly on Mastodon these days with at our podcast at podcast index dot social. I am also sporacling on x, Twitter, whatever you wanna call it with at the r cast and on LinkedIn. Just search for my name, and you will find me there. And, Mike, where can the listeners get a hold of you? Sure. You can find me on mastodon@[email protected],

[00:45:20] Mike Thomas:

or you can check me out, to what I'm up to on LinkedIn if you search for Catchbrook Analytics, ketchb r o o k.

[00:45:30] Eric Nantz:

Very nice. So we're very much looking forward to that new package release when you when you get that out the door. Hopefully, that'll be, top of the conversation in a future episode. But, yeah. I think we've we've, talked ourselves out. We're gonna close-up shop here with this edition of our weekly highlights. And, hopefully, we'll be back at our usual time. We may or may not because this is a time of year where vacations are happening, but one way or another, we'll let you know.

Hello, friends. We're back at episode 170 of the our weekly highlights podcast. Gotta love those even numbers as we bump up the episode count here. My name is Eric Nance, and I'm delighted you joined us from wherever you are around the world listening on your favorite podcast player. This is the weekly show where we talk about the awesome resources that have been highlighted in this week's current, our weekly issue. And I'm joined at the virtual hip as always by my awesome co host, Mike Thomas. Mike, how are you doing today?

[00:00:32] Mike Thomas:

I'm doing well, Eric. I'm taking a look at the highlights this week. I see that there's there's no shiny highlights, so this couldn't have been curated by by you.

[00:00:43] Eric Nantz:

Oh, the plot thickens. Oh, yes. It was. It was my turn this week. Good. That's so sure. There is some good shiny stuff in there that we may touch on a bit later. But, yeah, it was my my turn on the docket. So as usual, I'm doing these curations in many different environments, Mike. You're gonna learn this as your, little one gets older. You gotta be opportunistic when the time comes. And I was curating this either at piano lessons or swim practices or whatnot, but it ended up getting curated somehow. So we got some fun, great post to talk about today. Never as short as a great content to draw upon.

But as we always say in these, in these episodes, the curators are just one piece of the puzzle. We, of course, had tremendous help from the fellow curator team on our weekly as well as all the contributors like you around the world who are writing this great content and sending your poll requests for suggestions and improvements. So we're going to lead off. We're going to get pretty technical here in a very have in many different domains that honestly you would least expect. But we're gonna talk we're gonna go to the moon a little bit here, Mike. Not quite literally, but we're gonna see how R with the suite of packages authored by none other than Tyler Morgan Paul, who is the architect of what we call the raverse suite of packages for 3 d and 2 d visualizations in R.

His blog post as part of this highlight here is talking about how to use r to produce not just images of the moon and other services, but very accurate looking images with some really novel techniques that I definitely did not know about until reading this post. So I'll give a little background here, but when you look at graphics rendering and you'll see this in all sorts of different domains, right, with, like, those CGI type movies or images or, you know, animations, of course, gaming visuals and whatnot that have to do with some form of polygons. Little did you know, little did I know at least, that the triangle geometric shape is like the foundational building blocks of many of these computer graphics, which gets you really long ways, but it's not as optimal when you have to render a surface that is on 2 different sides of a spectrum.

1st, maybe something that looks completely smooth. No artifacts. No, you know, jagged edges or whatnot. Or the converse of that, something that has unique contours, unique bumps, if you will, such as, say, the surface of the moon with its craters and other elevation changes. Now there are 2 primary methods that Tyra talks about here that can counteract kind of the phenomenon of these triangles not really being optimal for this rendering. One of these is called subdivision where, in essence, these polygons are subdivided further into micro polygons often smaller than a single pixel, which means we're blurry looking at something small here to help bring a smoother appearance with tweaking a certain parameters.

And then on dealing with the converse of that, again, like dealing with bumpy surfaces or contours, you have what's called displacement of the vertices of these associated polygon to help bring this kind of artificial detail to the surface that, again, mimics what you might see in the real world with these contoured surfaces. And Tyra says that the Raver suite of packages now have capabilities to handle both of these key algorithmic kind of explorations or rendering steps to make these more accurate looking visuals. And as I think about this, as we walk through this example here, I harken back.

Again, Eric's gonna date himself here to my retro gaming roots where I remember very distinctly. It was in the, you know, early nineties, maybe mid nineties that the local arcade started having these racing games that were not those, like, sprite filled racing games like the yesteryear, like Out Run or whatnot. I remember vividly Sega making a game called virtual racing, which was on the first times I had seen in an arcade really robust looking racing cars in 3 d type perspectives, but you could tell they were made with polygons. And they were smooth. Some were smooth, and yet some are really jagged as heck when you went out crashes or sparks, and you could kinda see if you, like, if you had a pause button in the arcade, you can see these little artifacts that appear that look like little geometric shapes.

And in essence, that's what can happen when you render an image in the beginning, and he's got an example where he basically renders what looks to be, kind of like a cross between a sphere and a hexagon in a 3 d contour and then a, cube and then the r logo. But when you render this first of a few parameters that he does with one of his, packages, you can see, yeah, they're little polygon shapes. They look like what I would see in that kind of first attempt at that virtual racing game. But then he starts to show example code, again, new to the ravers of called subdivide_mesh, this function which lets you define just how deep you do this subdivision.

And he starts with levels of 2, and then he, you know, re renders the image. And now, in the second image, you look the left image. Again, you want to look at this as we're talking through it if you have access to the screen. That first, ball on the left looks like the Epcot center or a golf ball and, on on the golf course. You can tell. You can see those little triangles splitter throughout, which is not what he wants. He wants to make this look completely smooth. So guess what? It's all iterative in these steps. He tries another subdivide mesh call with, you know, first trying to make things look a little more smoother without going new subdivision levels, and it doesn't quite get the job done. They still look, you know, a little bit off, but he's rounded out some of the edges to that aforementioned cube, but he's still gotta go deeper. And, basically, he finds this sweet spot of, like, subdivision levels of 5, which means this is upping the count on these little small triangle shapes that are composed in each of these images.

And going from the start to finish of this, that added many, and I do mean many additional triangles to each of these shapes as compared to what he started with. It's a huge increase, and, in fact, it was a 1024 fold increase in these number of triangles, which may not be the best for your memory usage in your computer, although I there are no benchmarks around that. But I know from my, you know, brief explorations and things like, what's a blender for rendering, 3 d and Linux and other software, that as you make these shapes more complex, yeah, your computer is gonna be doing a lot more work with that. So in the end of this example, he's got 3 versions of the the rendered scene, what he started with, and then one in the middle, which is kind of like a compromise of like the subdivision levels and kind of moving some of these vertices around, and then the last one of using like all these triangles at once.

The good news is is that this shortcut approach in the middle, you can't really see a visual difference of the naked eye between that and the more complex version with the additional triangles. But the key point is that, a, Ray the Rayverse packages support this, and you're gonna have to experiment with it for your use case to get that sweet spot of the smoothness that you're looking for. So that was a great example how you want to give this kind of smooth appearance without those artifacts fault coming into play. Now, we turn our attention to the bumpier contour surfaces with, say, the moon as inspiration.

And he mentions that this will be familiar to anybody who deals with GIS type data in the past with elevations. He has a nice render of both the moon image as well as, moderate bay where you can see the contours very clearly in the black and white images, And then he starts by drawing a sphere of what looks like the moon, but when you look at it, it just looks like a very computer graphic looking moon with, like, a shade on the left and light on the right, but you would not tell that's anything really. So then comes the additional kind of experimentation of, okay, how do we first figure out what is the current displacement information that's in that image so you can get a sense on what's happening here and how many pixels are being sampled to help do this rendering by default.

So there are some handy functions that he creates to kind of get into this metadata. And he notices that in the first attempt, there's only about point 0 9% of the pixels from that moon image are being sampled to produce this more artificial image, and that's why it's not looking like a moon at all at that point. So gotta up the ante a little bit. Again, kind of like a iteration of this to figure out, okay, maybe I need to use more pixels to sample in this. It starts going from like 1.4% of them, Still not quite looking right as you look at the post. Ups it further, and he's got a handy function called generate moon mesh that he kinda ups the levels 1 by 1.

And eventually, he gets to a point where, hey, we're now we're getting to what looks like the craters on the image where about 5 or 6% of the pixels are being sampled. Still not quite there yet, but he ups the ante even more with this, levels. And then he finds what looks like, you know, a really nice looking moon where it ends up sampling about 89% of the pixels, which means that there is a lot going on behind the scenes in this image. And in fact, this would take up a lot of memory, And you can see there is kind of a there's a point of diminishing returns the more pixels you subsample here for this displacement. Not really a lot of change visually. So ends up, he finds a sweet spot between not going to the extreme of, like, 2,000,000 pixels resampled somewhere in between.

And hence, he's got a really nice visual at the end where you can see it it's getting getting much closer once he brings us back to the sphere shape. And there's a nice little phenomenon kind of towards the end where it looks like at each of the north and south poles of the moon, when you do it by default, it kind of looks like things are being sucked in like a vacuum. It's hard to explain. But then when he does the applying the contour more effectively, you see that it just looks like any other surface on the moon, nice little craters and whatnot.

As you can tell, I've been doing my best to explain this in an audio form, but what's really cool is that you can see kind of Tyler's thought process of when you have a problem he wants to solve with his raver suite of packages. These steps that he takes that go from start to finish, not too dissimilar to what I see many of the community do when they teach things like ggplot2. Start with your basic layer, start to customize layer by layer bit by bit until you get to that visual that you think is accurate given the data you're playing with. And in case a toddler's visual is here, to closely mimic what you might see if you put your telescope out there and look at the moon at night. So, again, a fascinating look to see the power of r in graphics rendering, which if he had told me this was possible 10, 15 years ago, I would have laughed in your face. But this is really opening the doors, I think, to many people making so many sophisticated images, not just in the 2 d plane but also in 3 d and really insightful walk through here. And you can tell when you read Tyra's writings just how much time and effort he's put into these packages to make all these capabilities possible. But yet with, you know, geological and very fit for purpose past.

So a lot to digest there, but, again, I think it's a very fascinating field.

[00:14:20] Mike Thomas:

Eric, I I couldn't agree more. I'm always fascinated by Tyler's work on this particular project. I think, you know, when the raverse sort of first came out, it was pretty revolutionary, I think, to the our ecosystem. You know, we had ggplot, and we could do, you know, a a lot in in ggplot and some of the other plotting packages that were available. But in terms of 3 d visualization, animations, things like that, you know, we never had anything like that as far as I know until Tyler came along. So these suite of packages in the in the Rayverse and in particular the ones that he's highlighting today and their new functionality are RayVertex and Ray render are pretty incredible.

I have tried to do some of this myself in the past. I think, I think you can expect your laptop fan to to a little bit as you try to do this. So get your GPUs ready, if you're especially if you're trying to add, you know, additional complexity and do some pretty in-depth things within these packages. But the the fact that we can do this at all is is absolutely incredible, and I loved the callback to sort of your early, you know, eighties nineties video game experience because that is that exactly what a lot of this blog post reminded me of as he leverages that subdivision levels argument in the subdivide mesh, function.

And you can sort of see how the the pixelation just starts to smooth out as that that number increases in terms of how many, subdivision levels are being used. And it's it's almost, you know, conceptually, like, following video game graphics from the eighties nineties to the 2000 as you sort of get away from these these triangles and the pixelation into something that looks, you know, much smoother, into the naked eye. You you really can't even see the pixelation at all. Great summary by you. I'm not sure if there's a whole lot more to add to it. It's an incredibly visual blog so it's difficult for us to do it spend a lot of time in, you know, 3 d visualization, I think you'll still find it fascinating.

[00:16:35] Eric Nantz:

Yeah. And it does remind me there is ripe opportunities that we often see shared on social media from time to time. They'll call it, like, accidental art when you're trying to do a neat plot, yet it doesn't come quite outright, but yet it's so interesting to look at. You wanna post it anyway. These things are ripe for accidental art, especially if yours truly is the one coding it up. But, I do think as you look at the evolution of these images, back to that racing example, it's like when I saw that virtual racing game back in the early nineties and, again, you could tell it was like Sega's, you know, first real attempt at making somewhat perform at Polygon renders.

And then it was like a couple years later, those of you who remember that Daytona USA racer game, it looked real. Like, everything was smooth. Everything was sharp. Everything is 60 frames per second. That is like you can have that same transformation as you play with these displacement, parameters and, again, the contour parameters. It's it's fascinating. It's absolutely fascinating. And what, who would imagine r could be an engine for graphics like this? And I've been following on social media kinda the other side of the spectrum where it's a slight tangent here, but Mike FC AKA Cool But Useless have been making all these packages that help me make sprite rendering even more easier in r itself.

And it's like, my goodness. We can make almost any type of game in r now. It's just a matter of time, really.

[00:18:08] Mike Thomas:

Absolutely. It's it's incredible stuff. Incredible stuff. And I do remember Daytona USA very fondly. How much fun was that?

[00:18:16] Eric Nantz:

Oh, lots of quarters of spending that one, and then, of course, the computer racers have just eat my lunch. But that was part of the design to get your money back in those days. So we're going to shift gears quite a bit here, to get into more of the data side of things because there are some really promising developments in some of the ecosystems that Mike and I have been speaking really highly about in recent episodes of our weekly highlights. And in our next highlight here, we're gonna go back to, the now getting a lot of traction, so to speak, the DuckDb paradigm, the DuckDb database that just got supercharged with a new plugin and a new capability of using a service that's new to us from the our side of things.

So and it happens to be called Mother Duck, and that's an awesome name. But, Mike, what is this all about?

[00:19:17] Mike Thomas:

So Mother Duck is a way to run as far as I know. It's sort of a whole cloud based data warehouse system, or platform, from the developers of DuckDb themselves that allow you to not only store data, in the cloud but to also run DuckDV itself in the cloud so that you don't need DuckDV installed on your own laptop. You just need to be able to authenticate into your Mother Duck account that you have, and you can run, you know, serverless cloud based analytics using DuckDb, but not needing anything installed on your own laptop which is is really cool besides, you know, the the packages to authenticate into it. So currently, and and, you know, one big component of this that that's noteworthy that I think we should touch on is DuckDV just released version 1.0.0 on June 3rd.

So this is incredibly exciting, you know, for the folks that have been following this project, for the folks that have been working on this project that we have a first sort of major, release of the project. And it's, you know, I think it's a a framework that we've seen, play out a few times, you know, in the data science ecosystem where a team will develop an amazing open source, framework or functionality. I think we've seen this, with the DBT package. If you're if you're, familiar with DBT software that I think allows you to do, more versionable ETL, They also have, I believe, a paid offering of that as well. I know that the team at Pymc sort of has some similar things going on, and it looks like the DuckDV team obviously has this incredibly powerful open source software that we can now use for doing some pretty incredibly efficient, ECL and and SQL querying on large datasets in, like, no time at all. But they've also built out, obviously, a, sort of privatized side of the business that allows them to to hopefully make money and to continue to, enhance DuckDV itself and that's this Mother Duck offering that we're talking about here. So there's pretty good documentation on, how to connect to your mother duck, service from Python but it looks like there's not necessarily any documentation out there yet on doing this in R.

Although the author of this blog post, Novikha Nakhov, notes that it's it should be pretty straightforward to do so, if you follow sort of the the Python framework and just adopt it leveraging, you know, what we know about how to connect to DuckDV databases using r. So that's what, Novica is doing in this blog post. He is outlining how you would go about connecting to a mother duck instance from our, leveraging our 441, on Linux with DuckDB version 1.0.0. It's pretty easy to connect, to, you know, a DuckDb database and in memory database and he has a nice little code snippet of how to do this, how to populate that database using a data frame, and then how to query it using SQL syntax. And, it also notes that we do have the new duckplier package that would allow you to do this in a more dplyr like approach as opposed to having to write a sequel string in your db get query argument. But for the purposes of this blog post we're not going to explore Duckplier.

So I Novica details the Python approach first to connecting to to mother duck, and really it's pretty simple. And this Mother Duck Extension actually comes native to the Python duckdb package. And it's just a little one liner essentially that allows you to connect to your mother duck instance. You'll automatically get a notification in the terminal telling you what URL you should enter in your browser to be able to authenticate to your mother duck service, and then you're essentially good to go from there. In R, it's a little little trickier. The syntax looks pretty similar. It's a a one liner to try to connect to your your mother duck instance.

But one of the first things that you have to do is actually from the terminal, I think write a little bit of code, to create a local database that's called md or shorthand for for mother duck. So the reason that you have to do that at nova deposits is that, the duck dp package for r doesn't automatically figure out that it needs to load this additional mother duck extension the way the Python package does. So you have to sort of do that yourself but, again, Novica has all the code for us to do that here and it's it's fairly straight forward. You have to run a DB execute command to load this mother duck extension and then you're pretty much good from there, to be able to connect your mother duck instance and write your SQL queries that are going to execute in the cloud as opposed to locally.

So it's a a fairly, you know, straightforward blog post. It's really really helpful, I think, for those of us that are exploring DuckDB and want to try out Mother Duck. I will note that there's a free tier of the mother duck offering that gives you I think 10 gigabytes of storage, up to 10 compute unit hours per month which is probably a lot, you know. I'm not sure, you know, we know how fast DuckDV queries execute. That's right. Most of my DuckDV queries even on parquet files, you know, that are gigabyte, 1 or 2 gigabytes in size will run-in maybe a second or 2, and there's no credit card required for this free offering.

So there's no reason to not try out this mother duck service if it's something you're interested in. And if you need to go up to, the the paid tier, it's $25 a month per organization, I guess not per user, which is pretty incredible. If you're an organization that wants to leverage this mother duck service, that gives you a a 100 gigabytes of storage and a 100 compute unit hours per month, which I, you know, unless you're doing some pretty crazy stuff, I can't imagine that you would you would hit that. So I think it's a very sort of generous price point that we have here, and, obviously, it's amazing that we have this free tier that allows you to to try it out first, if you if you want to and and maybe that's all you need as well. So pretty incredible. Great walk through by NovaCon on how to use this in R because up until now, I don't think there's been any documentation on how to connect to, the mother duck service from our and it seems like there there was a little tricky hack that he pointed out. That's definitely going to help. Those of us who wanna try this out ourselves, probably save us a lot of hours. So we're very grateful that he went down that rabbit

[00:25:57] Eric Nantz:

That's for sure. Ever since 1.0 was released. And I do recall, that's for sure, ever since 1.0 was released. And I do recall having to do some extension installation in my explorations a few weeks ago where I had to install a couple extensions, one of which was to help interactive object storage in AWS that didn't come by default. But, yeah, it's a similar syntax with what, Novica outlines here in this post. And, yeah, I guess, I'm interested in exploring mother duck to just see, you know, on a on a very basic basis just kinda how it plays in my workflows. One thing I'm immediately thinking of, Mike, is that if you're an organization or you're a smaller or big company and you wanna leverage DuckDV as your database back end for things like shiny apps or whatnot. It sure does seem like having that hosted in a data warehouse would be an intriguing idea to help offset some of that storage footprint from you to that to that vendor. Of course, we were remarking in the preshow that, you know, you you wanna at least have, like, a couple, you know, plans, if you will, of where you host these files because, you never know what can happen on hashtag just saying.

But in any event, what's nice about this service, I think they have some examples I was perusing yesterday where they did hook up a couple I don't know. It was either observable notebooks or some other custom web apps with, say, the New York City taxi data and doing simple queries on that and some drop downs. And it was, as you said, blazing fast with these queries to get the results back to the browser and not making your browser do all the data munging itself. It's gonna, know, do that on on the server side. And it does look like they're also exploring interactions with, wait for it, WebAssembly too. So there's gonna be lots of interesting, I think, applications to play here. And, again, I'm intrigued to see to see where this goes in the future.

[00:27:59] Mike Thomas:

Absolutely. Me too. It's, an exciting space to watch. I've only dipped my toe in it. One really cool thing about the RStudio IDE is if you do have a dot SQL file in your RStudio IDE, every time that you save that file if you have a more recent version of RStudio, it'll re execute the query for you and show it up in in sort of the query output pane of our studio and that works great in duck DB as well I was playing around with it against parquet file, just editing my my DuckDV query and seeing the results come out real time without even having to, like, highlight the code and and click run or anything like that. It's pretty incredible.

[00:28:40] Eric Nantz:

All these save so much time when you add it all together. That is for sure. Did you say parquet files, Mike? I did, Eric. You sure did, man. That's a perfect segue to our last highlight today because, honestly, one of the building blocks that we have been, you know, espousing the praises of in many recent episodes is parquet as kind of this next generation data storage format. And, certainly, when you hear us talk about a lot of the use cases are when you have a collection of large data that you can do pretty, you know, sophisticated group processing with. So you can get really fast and and real time kind of analyses, being executed on it. But that's not the only use case because our last highlight here is, awesome update to the nano parquetr package, created by Gabor Society, a software engineer at posit. And his latest blog post talks about some of the key updates to this package and some of its use cases. So, Mike, why don't you walk us through what's new here?

[00:30:01] Mike Thomas:

I'd be happy to, Eric. You know that I am, want to sing the praises of the parquet format and everything around it. So very excited about this Nano Parquet. Our package and the 0.3.0 release, that Gabor worked on, I believe, Hadley may have worked on it as well and some others, on the the Tidyverse team at Posit. So one of the reasons that they created the Nano Parquet package is that, they wanted to be able to, you know, interop with Parquet files without necessarily needing, some of the the weight that comes along with like the arrow package because the arrow package does have a fair amount of dependencies on it and it can be a little heavy to install.

So one of the things about this nanoparquet package is that it has no package or system dependencies at all other than a c plus plus 11 compiler. It compiles in about 30 seconds into an R package that is less than a megabyte in size. It allows you to read multiple parquet files which is in a traditional sort of data lake, structure very very common, with a single function, you know, sort of like what we have in the reader package. I I don't know if this is little known or if it's well known but if you just point that to a directory instead of a file it will read in all of the files in that directory assuming that they have the same schema, and essentially append the data together into a big data frame. And that's the same thing that the nanoparquets readparquet function will do for us. It also supports writing most our data frames to, Parquet files and has a pretty solid set of type mapping rules between our data types and the data types that get embedded as metadata in a parquet file, which is, you know, again, another reason why, you know, parquet format exists and I think was another part of the motivation for this nano parquet package that it gets a little bit of a reputation, the parquet format, for only being for large datasets.

Like, you only wanna use parquet file format when your your data is big. And, I think what Gabor is trying to argue here in this blog post and that I would agree with is that that's not necessarily, you know, the case. And I think that anyone can make a case for leveraging the parquet for file format for storing data of of any size. And one of the, I think, big reasons for that is, the, you know, ability to not only have the data in the file but also metadata around the data including the data types such that when you hand that parquet file off to to someone else, they don't have to in their code the the way we used to do when we read a CSV and and define what the data type for each column that's coming in. You don't have to do that because it's embedded in the file itself. So we have a lot more safety around, you know reading data frames and writing data frames and ensuring, portability, you know, not only between languages but between users essentially so that, you know, what you see is what you get.

So I I think that's really helpful. You know, we know that Parquet is performant and typically a smaller size than, you know, what you would store in a traditional delimited type of file, which is is helpful as well. And, you know, there's additional things like concurrency, a parquet file can be divided into to row groups so that parquet readers can can read, uncompress, and decode row groups in parallel parallel as Gabor points out. So just a lot of I think benefits for leveraging the parquet file format and I think this is maybe one of the first blogs that I've seen make the case for the parquet for file format for small data as opposed to big data. So I'm super excited about the nanoparquet, package because I think that there are some particular use cases, you know, potentially Shiny apps that I'm developing that are maybe reading a a smaller dataset where I don't necessarily need to, leverage the whole Arrow suite of tools and maybe I just need to to read that data set in, you know, one time, from a parquet file, and the nano parquet package might be all I need.

[00:34:23] Eric Nantz:

Yeah. I'm I'm with you. I'm so glad they give, you know, the spotlight not just to the the typical massive, you know, gigabyte gigabyte datasets. I think what Nanoparque is doing here, again, great for the small dataset, situation, but I really like these metadata functions that are put in here too because I think nano parquet could be a great tool for you, especially being new to parquet, getting to know the ins and outs of these files, knowing, like you said, the translation between the r object types and the parquet object types in the dataset. There are lots of handy handy functions here that maybe they are part of the arrow package. I just didn't realize it, but to be able to quickly have these simple functions that grab, say, the schema associated with parquet, grabbing the metadata directly in a very detailed printout of, you know, a nice tidy data frame of these different attributes. I think this is a great way to learn just what are the ins and outs of this really powerful file type. And, of course, Gabor is quick to mention some limitations for this. He does say he probably wouldn't recommend using this to write large parquet files because this has only been optimized for single threaded processing.

But with that in place, I still think this is an excellent way to kind of dive a little bit or really deep into what are the ins and outs of parquet. And then when you're ready for that transition, say, to a larger datasets, he obviously at at the conclusion of his post calls the Arrow project out as well as DuckDV out. So you've got it all it all leads somewhere. Right? You may your your situation may be that Nanoparquet is gonna fit your needs, and that's awesome. More power to you. And then as you escalate your data sizes, then you can still use par k. You're just maybe transitioning to a different package to import those in or the write those out. So I think it's it's a great introduction piece as well as I'll be honest. I'm putting on my life sciences hat for a second.

I would absolutely love to use parquet format as opposed to a certain format that's in that ends in 7 b that. You know what I'm talking about, those out there. This would be a game changer in that space.

[00:36:50] Mike Thomas:

I mean, the word parquet just sounds a lot smoother than, you know, SaaS 7 beat at. It it sort of sounds like, I don't know, r two d two or or I don't know. You know? Well, at least r two d two out of personality. Oh, goodness. Let's switch quickly and one acknowledgment, that they that Gabor makes in this blog post that I do wanna highlight just for the sake of talking about how small of a world it is out there in the data science data engineering community is nanoparquet is actually a fork of an R package that was being worked on called mini parquet, and the author of mini parquet that r package was Hans Nielsen, who is the founder of DuckDV.

[00:37:35] Eric Nantz:

Well, well, well, it all comes full circle, doesn't it? Oh, this is what a fascinating time. Awesome awesome call out. And I believe Hans will be keynoting at positconf this year, if I'm not mistaken.

[00:37:49] Mike Thomas:

You are correct. Yes. I As well as like every other data science conference, I think, this year.

[00:37:56] Eric Nantz:

He's been a busy man for sure.

[00:37:58] Mike Thomas:

Deservedly so.

[00:37:59] Eric Nantz: