Another way to hop on the LLM train with the chattr package, a clever use of defensive programming to get to those warnings in your tests faster, and a major milestone for the R-hub project.

Episode Links

- This week's curator: Tony ElHabr - @[email protected] (Mastodon) & @TonyElHabr (X/Twitter)

- Chat with AI in RStudio

- Test warnings faster

- R-hub v2

- Entire issue available at rweekly.org/2024-W16

- R/Pharma 2023 presentation by Edgar Ruiz (GitHub Copilot in RStudio) - https://youtu.be/-Fjb8LZmTSI

- The 2024 Appsilon Shiny Conference is just days away! https://www.shinyconf.com/

- Use the contact page at https://rweekly.fireside.fm/contact to send us your feedback

- R-Weekly Highlights on the Podcastindex.org - You can send a boost into the show directly in the Podcast Index. First, top-up with Alby, and then head over to the R-Weekly Highlights podcast entry on the index.

- A new way to think about value: https://value4value.info

- Get in touch with us on social media

- Eric Nantz: @[email protected] (Mastodon) and @theRcast (X/Twitter)

- Mike Thomas: @mike[email protected] (Mastodon) and @mikeketchbrook (X/Twitter)

- Memories of a Master - Street Fighter II: The World Warrior - Captain Hogan - https://ocremix.org/remix/OCR02268

- Higgins Goes to Miami - Adventure Island - virt - https://ocremix.org/remix/OCR00461

[00:00:03] Eric Nantz:

Hello, friends. We're back with episode 161 of the R Weekly Highlights podcast. And depending on when you're listening to this, you might get this a day earlier than expected because yours truly has the day job going on. We have a couple of on-site visits, but, nonetheless, we're gonna get an episode to you very quickly here. My name is Eric Nantz. And as always, I'm delighted that you join us from wherever you are around the world. And I'm joined at the hip as always by my awesome co-host, Mike Thomas. Mike, how are you doing today?

[00:00:33] Mike Thomas:

Doing well, Eric. Happy tax day for those in the US. Having a pretty exciting day today because we're bringing on a new team member at Ketchbrook Analytics, and if you're a small team, you know how big of a deal it is to bring on an additional person. So very, very excited about that. But, yeah. Looking forward to a great week.

[00:00:47] Eric Nantz:

Congratulations, Mike. I know a lot of stuff goes on behind the scenes to make those acquisitions happen, so to speak. And it sounds like you're gonna be teaming up quite well. And as always, if you wanna see what Mike's up to, yeah, I saw that in his LinkedIn feed. So definitely check that out. Thank you. Nonetheless, we are gonna get on with the business of R Weekly here, and our issue this week has been curated by Tony ElHabr, another one of our longtime contributors to the project. And as always, he had tremendous help from our fellow R Weekly team members and contributors like all of you around the world.

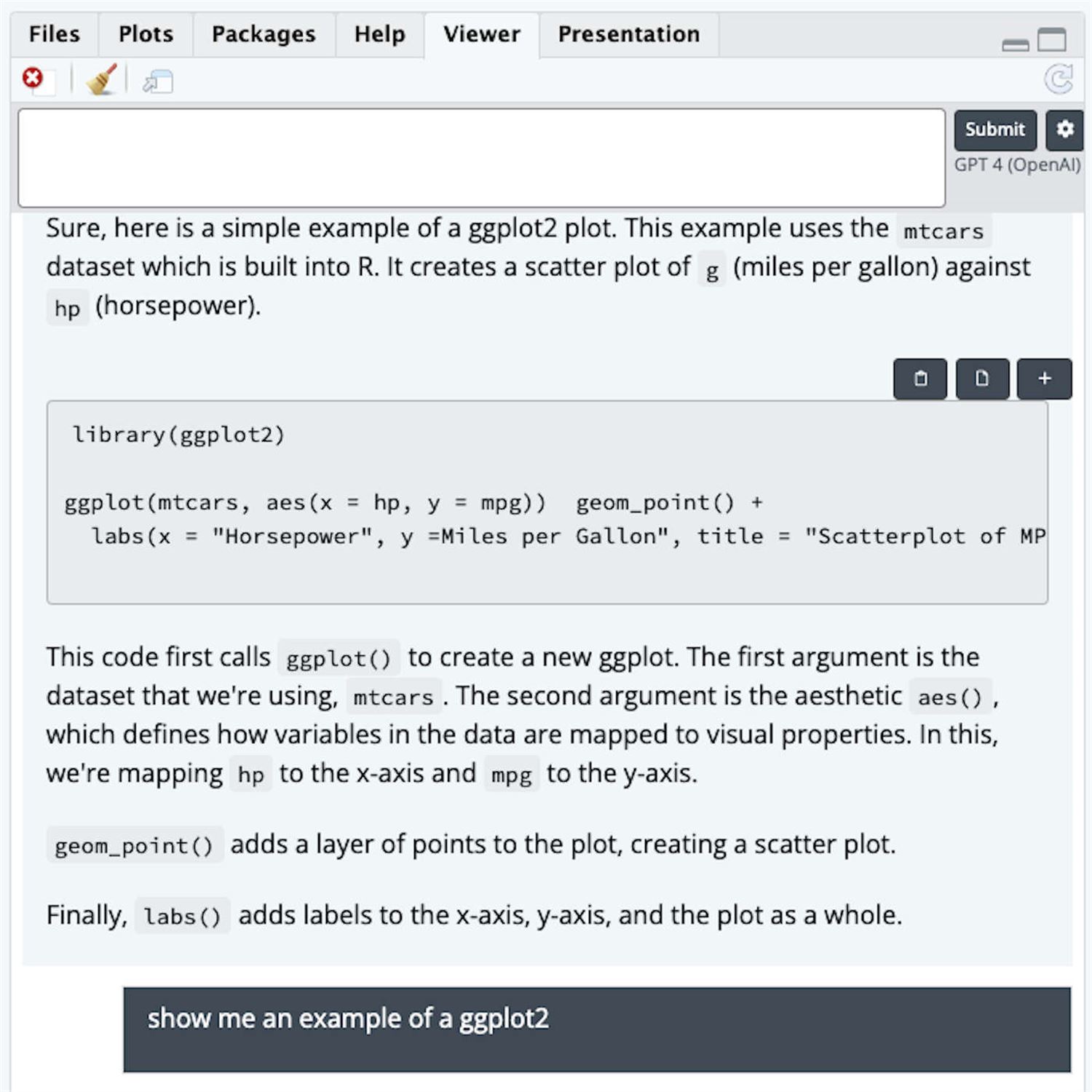

And as you recall, Mike, this is something we talked about last year, I believe: there was a pretty big splash across the entire tech sector with large language models and artificial intelligence. And the Posit group themselves made a big splash when they announced how they were integrating ChatGPT with their RStudio environment. And that's just one piece of the integration here because there are a lot more details, as they say. And that's the subject of our first highlight, which is all about the chattr package, which is coming from the author of this post as well as the author of the package, Posit software engineer Edgar Ruiz. And he tells us all about how you can chat with AI and large language models directly in RStudio itself via the chattr package.

Now, on the tin, the easiest way to interact with it is once you install the package and you link it to the model of your choice, and more on that a little later, you will, by default, be able to bring up a handy little Shiny gadget in the panel of your RStudio IDE. And it will look very familiar to those who have used things like ChatGPT and other models online. You give it your prompt, hit enter, and then you're going to get some feedback back. And it does do some nice things under the hood that tailor it to getting, say, R code if maybe you're developing a package or you're new to a certain package and you want a little snippet. It'll make it super easy for you to copy and paste those snippets directly into your source prompt, and it actually goes the other way as well.

Perhaps you're like me, and you like R Markdown for all your explorations of, like, your data analysis and exploring a package. Maybe you write that question in your Markdown file itself. And the chattr package also has some nice interactions with the RStudio addin feature where you could highlight that, say, text. That would basically be a prompt as if you, like, manually typed it in. Send that directly to chattr, and then it'll act as if you just prompted it directly. So nice little bidirectional communication on that front as well. So a very nice kind of user experience on that front.

Now the part that I've been pretty intrigued by, especially as this technology starts to hopefully get more mature, although it still seems like it's moving at a breakneck pace, is that across multiple industries, across many of the people I listen to, there is a lot of interest in, you know, having the ability to self-host your large language models and, hence, potentially being able to leverage the same technology without it leaving your company's firewall. And in my industry, that is a big, big deal because we could get in big hot water if we transferred some confidential stuff to the OpenAI suite just on its own.

Well, that's where chattr has probably my favorite feature: the ability to integrate with not just the OpenAI-type models of ChatGPT and the like, but also self-hosted models. In particular, they give first-class support for the chat LLM models, I believe. And some of these are coming from Meta themselves. And in fact, in particular, it's called LlamaGPTJ-chat. Again, some of these are being contributed by Meta and their research group. But with that framework, you can plug in different models that you can download that are freely available. They talk about LLaMA itself, GPT-J, Mosaic Pretrained Transformer. Some of these I'm not very familiar with, but the whole idea is that once you install these on your system, you just set a little configuration in the chattr package to map to those models.

And then lo and behold, you'll be able to communicate directly in your network or even on your computer itself without it, again, leaving the confines of your firewall or your own setup to go to another service. I do think that as this technology, yeah, gets more mainstream in industry, having that flexibility is going to be immensely important. And I'm very happy to see that even this early version of chattr is bringing support for local model execution, and they do encourage others to give it a try. And if there are models that they've seen online that are not supported yet, they are inviting, you know, issues to be filed on their issue tracker. And I'm sure Edgar and the team would love to pursue that further. But overall, certainly, as you're developing your R code, developing your package or data analysis, having a helping hand is never a bad thing. And chattr gives you the flexibility of going straight to OpenAI or straight to your local system instead. So we're really happy to see it and can't wait to see where it goes.
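For readers following along, here is a minimal sketch of the setup described above, assuming the chattr package is installed. The backend labels (`"llamagpt"` for a local LlamaGPTJ-chat setup, `"gpt35"` for OpenAI) and the `chattr_defaults()` arguments follow the package documentation from around the time of this episode and may differ in your installed version. Both file paths are placeholders, not real locations.

```r
# Sketch only: the chattr calls run when the package is installed and the
# session is interactive; otherwise the block reports that it was skipped.
backend <- "llamagpt"  # assumed label for a local backend; "gpt35" would target OpenAI

if (requireNamespace("chattr", quietly = TRUE) && interactive()) {
  chattr::chattr_use(backend)
  # Placeholder paths: point these at your own compiled chat program and model file.
  chattr::chattr_defaults(
    path  = "path/to/compiled/chat/program",
    model = "path/to/model/file.bin"
  )
  chattr::chattr_app()  # opens the Shiny gadget for chatting
} else {
  message("chattr is not installed or the session is not interactive; skipping.")
}
```

Keeping the backend label in one variable makes it easy to switch between a hosted and a self-hosted model without touching the rest of the setup.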

[00:06:26] Mike Thomas:

Yeah. Eric, this is super exciting, and I'm glad that you brought up the point about the need for leveraging sort of in-house LLMs. You know, I think that in sort of non-corporate settings, a lot of the time, right, or if you're just looking for help with your code, potentially, you know, you can leverage something like OpenAI or some of these models where you are sending information to a third-party service. Essentially, anything that you type is probably gonna be captured and used to retrain that model, if not for other purposes. And, you know, that's okay a lot of the time. But if you are asking a question that's sort of specific to, you know, the IP of your own organization or company, right, it's probably not a very good idea to do that. Your information security team is probably not gonna be too happy if you start doing that.

So the fact that we have the ability to integrate here in RStudio with some of these, you know, sort of in-house LLMs that are open source and that we can install and host ourselves is fantastic. I wasn't necessarily familiar with all of them. I do know that the Mosaic pretrained one, Mosaic is a company that I believe was, you know, leading in this space, and they were acquired by Databricks not too long ago. Then, I guess, the one other thing that I do wanna point out is if you are someone who's sort of like me, who's been using VS Code a little bit more lately than RStudio, that's okay. You don't have to just be in the RStudio IDE. You know, this article highlights chattr's integration with the RStudio IDE.

Specifically, you know, all the screenshots are sort of RStudio native, but, you know, this will all work outside of RStudio as well, you know, for example, in the terminal. So it's a great walkthrough on getting set up and getting started. I think it's hugely helpful, you know. I have sort of mixed feelings, as I'm sure you do, Eric, about large language models and their utility. You know, I think I was probably a little bit more pessimistic about them when they first came out, and I think they're growing on me a little bit, to be honest. I listened to a great podcast this weekend that was with the chief operating officer of GitHub.

And this is just sort of a side story, but I live in this very rural farm town in Connecticut, as you know, Eric. Not much around. We're not close to any cities or anything like that. And the chief operating officer of GitHub lives 4 minutes away from my house and I never knew. Wow. It's a small world. I sent him a little LinkedIn message to see if we're gonna get together sometime, but I haven't heard back yet, so I don't blame him. But, no. It was very interesting, and one of the points that he was talking about, which I hadn't necessarily considered before, is, you know, we talk a lot, Eric, about maintaining open source software and how it can be thankless sometimes. Right? There's not a lot of payoff there. But he was thinking about a world where, you know, somebody opens an issue in your open source repository and maybe, you know, Copilot or some sort of LLM is able to immediately show you some code to solve that issue. And obviously, you can go in and edit it, but any time savings that we can provide to open source maintainers, I think, could be a huge benefit to the whole community and especially, you know, the many folks that work on open source that really don't get much back for it.

So I I thought that was interesting. They're growing on me. I I love seeing this article come out because I I think that this integration is very timely and looking forward to continuing to watch, you know, how this space evolves.

[00:10:03] Eric Nantz:

Yeah. Certainly, I've been pessimistic just like you have, and I do think, depending on the context, if you're expecting too much, you're gonna be disappointed if you think it's gonna solve all your woes of development and also come up with a magical algorithm to save your company's bottom line. Yeah. Yeah. We're not there yet. But, however, I can definitely see the point of bridging that gap between doing so many things manually, such as looking up the concepts of a different language as you're folding maybe a custom JavaScript pipeline or a custom, like, Linux, you know, operation into your typical data workflow.

Having that helping hand to be able to guide you with additional development best practices or snippets that get you started quickly, I think, is still the type of low-hanging fruit where it cannot be overstated how valuable it is to get that extra helping hand. I think over time, as these models get, you know, more updated, more trained, hopefully with best practices for training, we're all going to benefit from that. But again, to me, the flexibility to be able to pivot in the direction that you choose, I think, is definitely paramount here. And, yeah, for you VS Code fans, guess what? The VS Code extension for R does indeed have support for RStudio addins. So you should be able to use chattr kind of out of the box even in your VS Code setup. I haven't tried it yet, but I've been able to use addins in the past with things like blogdown and other packages that had these nice little addin integrations. So, yeah, who knows? Give that a try, listener out there, if you're a VS Code power user, because I would imagine chattr is gonna fit right in there.

[00:11:54] Mike Thomas:

Yeah. It's a fantastic project, and I appreciate, you know, all the work that a lot of folks, it seems especially Edgar Ruiz, have put into this.

[00:12:01] Eric Nantz:

Yeah. You can definitely see it. And in fact, he actually gave a talk at the 2023 R/Pharma conference about some of his developments. So I'll have a link to that in the show notes. Now we told you how, you know, using things like large language models can make things a bit faster for your development. Well, another thing that you want faster, especially when things go haywire, is if you're in the package development mindset and you're starting to do the right thing. If you've been listening to us and others in the community, you write your function. You know that function is important.

Make a test for that, folks. It'll save you so much time and effort in the long run. Well, you may be in situations where you're writing a function that definitely has a lot going on, so to speak. Maybe it takes a lot of time to execute. Maybe it's interacting with some, you know, API that needs a little time to digest things. And you still wanna keep in the workflow of being able to test this efficiently, especially when things go haywire. And our next highlight here comes from Mike Mahoney, a PhD candidate who has also had definitely more than a few contributions to R Weekly in the past, where he talks about some of his recent explorations in helping to decipher the ways to fail fast in your tests with respect to custom warnings and errors.

And what we're talking about here is that typically speaking, when you have a function that's depending on some kind of user input, you probably wanna have some guardrails around it to make sure the inputs are meeting the specific needs of that back end processing. And if you detect that something's out of line, you will often wanna send a message to the user or in some cases, even a warning or an error right off the bat to the user that something is not right. They're gonna have to go and try it again. Well, maybe it is more of a warning concept or maybe they'll still get a result, but it may not be quite what they expect. And what Mike does in his example here, he has a little mock function that is intentionally meant to take a while, but he's making clever use of the rlang package to not only display the message to the user, but also attach a custom class to that message.

I glossed over this years ago when I got familiar with rlang and cli, but this is a handy little feature because if you attach a class to this type of message or warning or error, then in your test to determine if that said warning or error actually fires, instead of having to test for the verbatim text of the message that was shown to the user, you can test for that class of message, which might be handy if you have more than one of these in a given function. Now that on its own, he's got an example where he builds a test to do that in his test suite. But there's one big issue that, again, if you have an expensive function, you're going to run into: the function is still going to try to go through the rest of the processing even if your test for that warning is successful.
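As a rough illustration of testing for a class rather than the message text, here is a base R sketch. It is not the code from the post, which uses rlang to attach the class and testthat to check it; `check_size()` and the class name `big_input_warning` are made up for this example.

```r
# Hypothetical validator: signals a warning that carries a custom class.
check_size <- function(x) {
  if (x > .Machine$integer.max) {
    warning(warningCondition(
      "Input is larger than the machine integer maximum.",
      class = "big_input_warning"
    ))
  }
  invisible(x)
}

# Capture the condition object and inspect its class instead of its text.
caught <- tryCatch(check_size(2^40), warning = function(w) w)
has_class <- inherits(caught, "big_input_warning")
```

With testthat, the equivalent check would be along the lines of `expect_warning(check_size(2^40), class = "big_input_warning")`, which keeps passing even if the wording of the message changes later.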

Well, how do you get it so that you can, say, escape the hatch, so to speak, when that warning occurs? That's where the rest of the example that Mike puts together comes in. He develops a nice little trick that's making use of the tryCatch function, which comes in base R, and it's a way for you to evaluate a function. And if you detect that there's an error, you can tell it what to do in that post-processing. So what Mike does in this example is he takes the detection of that warning condition in his function, which, in this case, is making a huge number, going above the machine integer max.

And then in his tryCatch block, he's got, okay, he expects an error, that error being actually a warning message instead of a typical error. So he does a little clever thing here, and this is not modifying the function itself. It's modifying the test_that declaration for that function. So the function itself still has that nice, succinct syntax of: if the integer is too big, send the warning, carry on. But it's in the test_that block that he switches it a bit and says, I'm gonna expect that error for that warning condition. And that way, if the warning does indeed output as I expect, it's just gonna get out, and then I get on with my day, so to speak.
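The trick described here can be sketched in base R as follows. This is a sketch under stated assumptions, not the post's code: `slow_square()`, `promote_warning()`, and the class name are hypothetical, and `Sys.sleep(2)` stands in for the expensive work. The function itself is left untouched; only the test side turns the classed warning into an error so evaluation stops as soon as the warning is signalled.

```r
# Hypothetical slow function: signals a classed warning, then keeps working.
slow_square <- function(x) {
  if (x > .Machine$integer.max) {
    warning(warningCondition(
      "x is larger than the machine integer maximum",
      class = "big_input_warning"
    ))
  }
  Sys.sleep(2)  # stand-in for the expensive part of the function
  x^2
}

# Test-side helper: turn the classed warning into an error, so evaluation of
# `expr` stops at the warning instead of running to completion.
promote_warning <- function(expr) {
  tryCatch(
    expr,
    big_input_warning = function(w) stop(conditionMessage(w), call. = FALSE)
  )
}

big <- .Machine$integer.max + 1  # a double just past the integer maximum

timing <- system.time(
  outcome <- tryCatch(
    promote_warning(slow_square(big)),
    error = function(e) "failed fast"
  )
)
elapsed <- timing[["elapsed"]]
```

In a testthat suite, the outer `tryCatch()` would be replaced by something like `expect_error(promote_warning(slow_square(big)))`. The trade-off is that this test never exercises the slow path, so a separate test is still needed for the function's actual result.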

Now this may not be a one-size-fits-all for everybody because maybe there are cases where you're still gonna wanna test if that function does perform successfully. But I could definitely see, as you're in, like, the iterative development workflow, that you'll want that flexibility to say, okay, I just wanna test for my error condition first and then not get bogged down with the execution time in that iterative process. And then when I feel like I'm confident enough, then start building in the rest of the testing suite. Again, testing and development practices can be a highly personal thing, but I just love the use of these built-in features of R to kinda make your day-to-day a bit easier in your testing suite. So a short example here, easy to digest, but I think it's got immense value, especially if you're in your early days of building that really

[00:17:44] Mike Thomas:

extremely clever by Mike, and I appreciate him putting this example code together to demonstrate this use case. It sort of reminds me of a concept that maybe Maëlle Salmon was the author behind, but just the concept of thinking about an early return as opposed to a return right at the end of your function. You know, if some condition is met, then return early instead of executing all the rest of the code. So, great example here by Mike. I really encourage folks to check out the example code. It's sort of hard to do it justice in a podcast. But this is really easy base R code for the most part, with a little bit of rlang and testthat, that you could run natively if you wanted to and see the time difference here that Mike expresses.

[00:18:29] Eric Nantz:

Yeah. I'm again, great nugget of wisdom here that I'm gonna be taking into my packages that I have to deal with either launching, say, a complicated model or interacting with an API that I have no control over, who maybe those engineers didn't exactly make it efficient on the back end. So if I wanna fail, I wanna fail fast, and hence, it's gonna be a nice little snippet that I can take into my dev toolbox to make it fail fast and still keep that function pretty pure in the process. And speaking of making things easy for you, again, going back into the package development mindset, you've done that awesome work. You've got that package ready to go.

And then you're at that point of, am I really ready for CRAN yet? It can be a daunting task, but there's been an existing resource for us in the R community for over 8 years that has been immensely helpful as another sanity check for our packages, especially with respect to different environments and different architectures. Well, the project we're talking about is the R-hub project, which has been led by Gábor Csárdi, a software engineer at Posit. I believe Maëlle Salmon has also contributed heavily to this project as well. Well, they have a very big milestone right now in our last highlight here because they are officially announcing in their blog post that they are moving to R-hub version 2. And, like I said, the impact that R-hub has had on the community itself cannot be overstated with respect to package development and helping authors really get to the nitty-gritty of how their package is gonna work across different architectures.

And this has a rich history. As I said, this was actually a project funded by the R Consortium, which I've spoken highly about, all the way back in 2015. So it has been standing up for years. Now why is this version 2 so exciting? Well, they are making major pivots to their infrastructure, where a lot of this has been, in essence, self-made or homegrown in terms of architectures for containers and servers. Now they are piggybacking off of GitHub Actions to help automate many of these same checks, and more, that they've been doing in R-hub over the years. And if that sounds familiar, there has been another project we've been speaking highly about called R-universe that is also heavily invested in the GitHub Actions route. So now that's 2 major, major influences in the development community for R that are making big headway here.

Now you as the end user, i.e., the package author who wants to leverage this version of R-hub, what do you have to do? The good news is there's always been a package called rhub, literally in the R ecosystem itself. You just gotta update that package to the latest version that they're announcing here. And then I believe you might have to reregister for interaction with the service. But if you've done it before, it should be a fairly seamless operation. And then the mechanism for you as the end user is exactly the same. You're gonna be able to supply or request that your package be checked in a more custom way. More on that in a minute. But we have a special configuration file that goes in your GitHub repository.

Assuming your package source code is on GitHub, it'll be like a YAML file that lives in the main branch of your repo, and optionally any custom branch, so that on every push to that said branch, it'll trigger these R-hub version 2 checks in GitHub Actions. So then you can check the progress on that in the GitHub Actions dialogue much like you would for any other GitHub Action. I've been living in GitHub Actions over the years, so it's becoming much more comfortable to me, albeit still not 100% yet. But again, they're doing all the hard work for you. You've just got to put this configuration in your repo, and the rhub package itself will let you do that very quickly. And like I said, if you don't want to host your package itself on GitHub and still want to take advantage of something like GitHub Actions, there is a path forward for you as well, where you can leverage the custom runners that the R-hub team has put together that are hosted under the R Consortium itself as these on-demand runners, which would be similar to what you saw in the previous version of R-hub.
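As a rough sketch of that workflow, assuming rhub version 2 is installed and the working directory is a package inside a Git repository with a GitHub remote: the platform names below are assumptions chosen for illustration, and `rhub::rhub_platforms()` lists the ones actually available.

```r
# Sketch only: the rhub calls run in an interactive session with rhub
# installed; otherwise the block reports that it was skipped.
platforms <- c("linux", "macos", "windows")  # assumed names; see rhub::rhub_platforms()

if (requireNamespace("rhub", quietly = TRUE) && interactive()) {
  rhub::rhub_setup()   # adds the workflow YAML file; commit and push it afterwards
  rhub::rhub_doctor()  # checks that the GitHub side is configured correctly
  rhub::rhub_check(platforms = platforms)  # starts the checks on GitHub Actions
} else {
  message("rhub is not installed or the session is not interactive; skipping.")
}
```

Calling `rhub::rhub_check()` without a `platforms` argument prompts for the platforms interactively instead.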

But this is useful if you want to keep your package outside of GitHub and still want to leverage these kinds of checks. There are a couple of caveats, though. Once you opt into this kind of check, obviously, the checks that are being run in this R-hub GitHub org under the R Consortium are still going to be public. So if you're okay with that, great. But that's just something to keep in mind. With that said, I do think that this is gonna help the team immensely with their back-end infrastructure. And, hopefully, you as the end user will be able to seamlessly opt into this, especially if you've already been invested in R-hub. It should be a pretty seamless operation. And if you're new to R-hub, it sounds like this is gonna be even faster for you and even more reliable and, hopefully, you know, get you on your way with more efficient package development.

So big congratulations to Gábor and Maëlle and others on the R-hub team for this terrific milestone of version 2.

[00:24:09] Mike Thomas:

Yeah, Eric. This is super exciting. You know, we've talked about this before, but the need to be able to check your package and ensure that it works and doesn't throw any warnings or notes or anything like that across multiple different operating systems and setups that differ from your own, right? R-hub was really, before this, one of the only places where you could do that, and it's fantastic and super powerful. It looks like the rhub_check function is a really nice interactive function that tries to identify the Git and GitHub repository that belongs to the package that you are trying to check. And then it has this whole list of, it looks like, about 20 different platforms that you can check your package on. And you can check it against as many of those platforms as you want, I think, just by entering into the console the index number for each platform that you want, comma separated. One of the interesting things, Eric, and I don't know if you know the answer to this, but if I'm looking at, you know, checks 1 through 4 here on Linux, macOS, and Windows, it says that it's going to check that package against R, and then it has a little asterisk, any version. So does that mean that, deeper within maybe your YAML configuration file for your GitHub Actions check, you're able to specify the exact version of R that you want, or is this sort of choosing the version of R for you?

[00:25:45] Eric Nantz:

I'd imagine it's probably choosing for you, but I wouldn't be surprised if, deep in the weeds, you are able to customize that in some way. I just haven't tried it myself yet.

[00:25:58] Mike Thomas:

Got it. No. Because that would be interesting, because I have sort of a recurring use case where I feel like we need to check against maybe 3 versions: you know, the latest major release of R, probably the next beta release as well that's coming, and then the previous major release as well, because we know some folks in organizations sort of lag behind. So we wanna make sure that everything's working across past, present, and future, if you will. So I'll have to dig a little bit deeper into it. Maybe next week I could share my results on the ability to specify particular R versions here. Anybody on Mastodon that wants to chime in, feel free as well. But, yeah, this is fantastic and, you know, there are, as you mentioned, some of those limitations around your package being public. You know, if you do want to keep your package private and you have it in a private GitHub repository, you just wanna use the rhub_setup and rhub_check functions instead of the rc_submit function, and that should be able to keep all of your code private for you and take care of that. But this is really exciting stuff from the R Consortium and from the R-hub team, and I really appreciate all the work that they have done to allow us to more robustly develop software, check that our software is working, and build working tools for others to use.

[00:27:16] Eric Nantz:

Yeah. I'm working with a teammate at the day job, and we're about to open source a longtime package in our internal pipeline and hopefully get it to CRAN. And they were asking me, yeah, what's this rhub check stuff? I was like, oh, you're asking me at the right time. They just released version 2. So we're gonna have a play with that ourselves, probably in the next week or 2, and see how that turns out for us. But, yeah, we're gonna be putting that on CRAN. So between that and R-universe, I think we're gonna be in good hands, so to speak, to prepare for that big milestone for us.

[00:27:58] Mike Thomas:

That's exciting. And I do wanna point out that there is also, it looks like, a very new but nice place to potentially ask questions as you kick the tires on this, which is a discussions board in the GitHub repository for R-hub. So definitely check that out.

[00:28:01] Eric Nantz:

Yeah. This is, again, very welcome, to get that real-time kind of feedback, you know, submitted. So definitely have that. Check out, of course, the post itself, and you'll get a direct link to it. Having this dialogue early is going to be immensely helpful to Gábor and the team to make sure everything's working out correctly and, of course, to help with the future enhancements to R-hub itself. And you know what else can help you all out there is, of course, the rest of the R Weekly issue. We've got a whole smorgasbord of package highlights, new updates, existing packages getting major updates, tutorials, and great uses of data science across the board. So we'll take a couple of minutes for our additional finds here.

And as I've been leveraging different, you know, data back ends for my data explorations, especially with huge datasets, I've been looking into things like, you know, of course, historically SQLite, DuckDB now, you know, Parquet. It's all coming together, as they say. Art Steinmetz has a great post in R Weekly this issue about the truth about tidy wrappers. It's a provocative title, but if you ever want a technical deep dive into the benefits and trade-offs of performance and other considerations with some of these wrappers that you've heard about that, say, let you use dplyr with, you know, relational databases, Parquet files, DuckDB.

This post is for you. It is a very comprehensive treatment, with lots of example metrics, so you can make an informed decision as you look at the datasets you're analyzing in your particular project and see if it's the right fit to stick with the wrappers or to go full native with the respective database engine. So a really thought-provoking read, and a great post by Art on that one. And, Mike, what did you find?

[00:29:56] Mike Thomas:

No. It's a great find, Eric. I found a blog post by Hadley Wickham announcing Tidyverse Developer Day 2024.

[00:30:01] Eric Nantz:

Woo hoo. Yeah. This is exciting. It seems like it's been a little while since they had a Tidyverse Developer Day.

[00:30:12] Mike Thomas:

On August 15th. And if you're curious about what Tidyverse Developer Day is, it's just a really open, communal day of folks sitting and learning together and coding to try to promote contribution to the Tidyverse codebase. They're going to provide everyone with food, and all you need to do is bring your laptop and your yearning to learn, so to speak. So it looks like anyone can attend, regardless of whether you've never created a pull request before or, as Hadley says, you've already made your 10th package. So they're welcoming beginners to intermediate to advanced folks. Anybody that's interested in sort of the concepts of maybe just contributing to open source, or contributing to the Tidyverse specifically.

I'm sure that they will have many issues already labeled and ready to go, from sort of low-level beginner stuff to probably more advanced stuff if you really wanna get into the weeds of the Tidyverse. It's gonna cost $10, and they say that really that's just because they don't want, you know, a lot of people taking a ticket and not necessarily showing up. They're trying to encourage, you know, some commitment from the folks that actually say that they're going to register for this. And I think they're looking forward to this day. And I'm going to try to make it if possible. I'll have to see if the logistics all line up. But this is super exciting. Just another thing that I love about open source. The fact that, you know, they're going to have a day dedicated to this, a very open sort of forum for folks to help contribute to code that's going to get used by, you know, thousands, if not tens or hundreds of thousands or millions, of people around the world. Pretty exciting.

[00:31:56] Eric Nantz:

Yeah. I've never actually been to a developer day myself, but I've interacted with many people that have in the earlier years of the RStudio conference, and everybody immensely enjoyed the experience. And it helps them get over that hump of package development, you know, contributing to open source. It's a friendly, welcoming environment. Yeah. Like I said, I've heard great stories from many in the R community, of all types of experience levels, getting such tangible benefits and, of course, helping open source along the way. So I highly recommend, if you have the capacity, to join that effort as well.

And, yeah, as we're recording, Mike, I'll do one little mini plug here. We are only a couple of days away from the 2024 Shiny conference hosted by Appsilon, starting this Wednesday. Hopefully, by the time you're hearing this, there'll still be a day or so left and you can still register. We'll have a link to the conference site itself if you haven't registered yet. I am thrilled to be chairing the life sciences track, and I'll be leading a panel discussion about some of the major innovations in life sciences these days with Shiny. I'll be joined by Donnie Unardi, Harvey Lieberman, and Becca Krause. They are an all star team, if I dare say so myself, of practitioners in life sciences that are pushing Shiny to another level, and I'll be thrilled to, you know, dive into some of these topics with them.

[00:33:27] Mike Thomas:

You know, super exciting that Shiny Conf is this week. We are looking forward to it. It's finally arrived. We have a little app showcase that's taking place on Thursday that we're excited for, but we're mostly excited for all the other fantastic content that will be presented during the conference. So really appreciate Appsilon putting that on, I think, with some help from Posit and others as well.

[00:33:46] Eric Nantz:

Of course, we hope that you enjoyed this episode, and also R Weekly is meant for you in the community and is powered by you in the community. The best way to help the project is to send your favorite resource or that new resource you found. We're just a pull request away. It's all available at rweekly.org. Click that little ribbon in the upper right. You'll get a link to the upcoming issue draft. All Markdown all the time. You know Markdown. I'm sure you do. If you haven't, it'll take you maybe 5 minutes to learn it. And if you can't learn it in 5 minutes, my good friend, Yihui, will give you $5. Just kidding.

He did tell me that one. So, luckily, I didn't need 5 minutes to learn it, though. Oh, I love it. I love it. And, of course, we look forward to you continuing to listen to this very show. We love hearing from you as well. We are now about two and a half weeks, I believe, into our new hosting provider, and everything seems to be working smoothly again. But I'm really excited for the directions I can take this platform in the future. In fact, I'm hoping I can share some really fancy stats with all of you based on some of the new back end stuff I've been able to integrate with this new provider. But, nonetheless, we love hearing from you. You can get in touch with us with the contact page, direct link in this episode's show notes. You can also send us a fun little boost along the way with one of these modern podcast apps you may have been hearing about.

We have a link to all those in the show notes as well. And, of course, we love hearing from you directly on our various accounts online. I'm mostly on Mastodon these days with @rpodcast at podcastindex.social. I'm on LinkedIn as well. Just search my name. You'll find me there. And, occasionally, on the Weapon X Twitter thingamajig at @theRcast. And, Mike, where can our listeners find you?

[00:35:29] Mike Thomas:

Yeah. You can find me on Mastodon as well at [email protected]. Or you can check out what I'm up to on LinkedIn if you search for Ketchbrook Analytics, K-E-T-C-H-B-R-O-O-K.

[00:35:43] Eric Nantz:

Awesome stuff. And, again, Mike's always got some cool stuff cooking in the oven, so to speak. So it's always great to see what you're up to. And with that, we're gonna put this episode out of the oven. We are done for today. We're gonna wrap up this episode of R Weekly Highlights, and we'll be back with another edition next week.

Hello, friends. We're back with episode a 161 of the RWKUHollwitz podcast. And depending when you're listening to this, you might get this a day earlier than expected because yours truly has the, day job going on. We have a couple on-site visits, but, nonetheless, we're gonna get an episode to you very quickly here. My name is Eric Nance. And as always, I'm delighted that you join us from wherever you are around the world. And I'm joined at the hip as always by my awesome co host, Mike Thomas. Mike, how are you doing today? Doing well, Eric. Happy tax day for those in the US.

[00:00:33] Mike Thomas:

Having a pretty exciting day today because we're bringing on a new team member at Catch Brook Analytics, and if you're a small team, you know how big of a deal it is to to bring on an additional person. So very, very excited about that. But, yeah. Looking forward to a great week.

[00:00:47] Eric Nantz:

Congratulations, Mike. I know a lot of stuff goes on behind the scenes to make those those acquisitions happen, so to speak. And sounds like you're you're gonna be teaming up quite well. And as always, if you wanna see what Mike's up to, yeah, I saw that in his LinkedIn feed. So definitely check that out. Thank you. Nonetheless yep. Nonetheless, we are gonna get on the business of our weekly here, and we our issue this week has been curated by Tony Elharbor, another one of our longtime contributors to the project. And as always, he had tremendous help from our fellow our our weekly team members and contributors like all of you around the world.

And as you recall, Mike, this is something we talked about last year, I believe, is that there was a pretty big splash across the entire tech sector with large language models and artificial intelligence. And the Pazit group themselves had made a big splash when they announced how they were integrating chatgpt with their RStudio environment. And that's just one piece of the integration here because there is a lot more details, as they say. And that's the subject of our first highlight, which is all about the chatterrpackage, which is coming from the author of this post as well as the author of the package, our posit software engineer, Edgar Ruiz. And he tells us all about how you can chat with AI and large Angular models directly in RStudio itself via the Chatter package.

Then on the 10, the easiest way to interact with it is once you install the package and you link it to the model of your choice, and more on that a little later, you will, by default, be able to bring up a handy little shiny gadget in the panel of your rstudio IDE. And it will look very familiar to those who have used things like chat GPT and other models online. You give it your prompt, hit enter, and then you're going to get some feedback back. And it does do some nice things under the hood that's tailoring it to, say, getting, say, our code for maybe you're developing a package or you're new to a certain package and you want a little snippet. It'll make it super easy for you to copy paste those snippets directly into your source prompt and actually going the other way as well.

Perhaps you're like me, and you like the right markdown for all your expirations of, like, your data analysis and exploring a package. Maybe you write that question in your markdown file itself. And also, the Chatter package also has some nice interactions with the Rstudio add in feature where you could highlight that, say, text. That would basically be a prompt if you, like, manually typed it in. Send that directly to Chatter, and then it'll act as if you just prompted it directly. So nice little bidirectional communication on that front as well. So very nice kind of user experience on that front.

Now the parts that I've been pretty intrigued by, especially as this technology starts to hopefully get more mature, although it still seems like moving at a breakneck pace, is that across multiple industries, across many of the people I listen to, there is a lot of interest in, you know, having the ability to self host your large language models and, hence, potentially being able to leverage the same technology without it leaving your company's firewall. And in my industry, that is a big, big deal because, we could get in big hot water if we if we transferred some confidential stuff in the OpenAI suite just on its own.

Well, that's where Chatter has probably my favorite feature is the ability to integrate with not just the OpenAI type models of Chat, GPT, and the like, but also self hosted models. In particular, they give first class support for the chat LOM models, I believe. And some of these are coming from Meta themselves. And in fact, in particular, it's called Wama GPTJ chat. Again, some of these are being contributed by Meta and their research group. But with that framework, you can plug in different models that you can download that are freely available. They talk about WAMA itself, GPTJ, Mosaic pre chain transformers. Some of these I'm very not familiar of, but the whole idea is that once you install these on your system, you just set a little configuration in the Chatter package to map to those models.

And then lo and behold, you'll be able to communicate directly in your network or even on your your computer itself without it again leaving the confines of your firewall or your own setup to go to another service. I do think that as this technology, yeah, gets more mainstream in industry, having that flexibility is going to be immensely important. And I'm very happy to see that even this early version of Chatter is bringing support for the local model execution, and they do encourage others to give it a try. And if there are models that they've seen online that are not supported yet, they are inviting, you know, issues to be filed on their issue tracker. And I'm sure Edgar and the team would love to pursue that further. But overall, certainly, as developing your R code, developing your package or data analysis, having a helping hand is never a bad thing. And Chatter gives you the flexibility of going straight to OpenAI or straight to your local system instead. So we're really happy to see it and can't wait to see where it goes.

[00:06:26] Mike Thomas:

Yeah. Eric, this is super exciting and I'm glad that you brought up the the point about the need for leveraging, sort of in house LLMs. You know, I think that in sort of non corporate settings, a lot of the times, right, or if you're just looking for help in your code, potentially, you know, you can leverage something like OpenAI or some of these these models where you are sending information to a third party service. Essentially, anything that you type is is probably gonna be captured and and used to retrain that model, if not for other purposes. And and, you know, that's okay a lot of the time. But if you are asking a question that's sort of specific to, you know, the the IP of your own organization or company, right, it's probably not a very good idea to do that. Your, your your information security team is probably not gonna be too happy if you start doing that.

So the fact that we have the ability to integrate here in our studio with some of these, you know, sort of, in house LLMs, you know, that that are open source and we can install and host ourselves is fantastic. I wasn't necessarily familiar with all of them. I do know that the Mosaic, pre trained Mosaic is a company that I believe was, you know, leading in this space and they were acquired by Databricks not too long ago. Then, I guess, the one other thing that I do wanna point out is if you are, someone who's sort of like me, who's been using Versus Code a little bit more, lately than RStudio, that's okay. You don't have to just be in the RStudio IDE. You know, this article highlights Chatter's integration with with RStudio's IDE.

Specifically, you know, all the screenshots are sort of Rstudio native, but, you know, this will all work outside of Rstudio as well, you know, for example, in the terminal. So it's a great walk through on on getting set up and and getting started. I think it's it's hugely helpful, you know. I have sort of mixed feelings as I'm sure you do about Eric. You do, Eric, about large language models and their utility. You know, I think I was probably a little bit more pessimistic about them when they first came out and I think they're growing on me a little bit to be honest. 1 I listened to a great podcast this weekend, that was with the chief operating officer of GitHub.

And long this is just sort of a side story, but I live in this this very rural farm town in Connecticut as you know, Eric. Not much around. We're not we're not close to to any cities or anything like that. And the chief operating officer of GitHub lives 4 minutes away from my house and I never knew. Wow. In a small world. I sent him a little LinkedIn message to see if we're gonna get together sometime but I haven't heard back yet so I I don't blame him. But, no. It was very interesting and one of the points that he was talking about which I hadn't necessarily considered before is you know we talk a lot Eric about you know maintaining open source software and how it can be thankless sometimes. Right? There's not a lot of payoff there. But he was thinking about a world where, you know, somebody opens an issue in your, you know, open source repository that you have and maybe, you know, Copilot or or some sort of LLM is able to immediately show you some code to solve that issue. And obviously, you can go in and edit it, but any time savings that we can provide to open source maintainers, I think could be a huge benefit to the whole community and especially, you know, the many folks that work on open source that really don't get much back for it.

So I I thought that was interesting. They're growing on me. I I love seeing this article come out because I I think that this integration is very timely and looking forward to continuing to watch, you know, how this space evolves.

[00:10:03] Eric Nantz:

Yeah. Certainly, I've been pessimistic just like you have, and I do think depending on the context, there there are ways that if you're expecting too much, it's not you're gonna be you're gonna be disappointed if you think it's gonna solve all your woes of development and also coming up with a magical algorithm to save your company's bottom line. Yeah. Yeah. We're not there yet. But, however, I can definitely see the point of bridging that gap between doing so many things manually, such as looking up the concepts of a different language as you're folding maybe a custom JavaScript pipeline or custom, like, Linux, you know, operation into your typical data workflow.

Having that helping hand to be able to guide you with additional development best practices or snippets that get you started quickly, I think, is still the the the type of low hanging fruit that I think cannot be understated how valuable it is to get that extra helping hand. I think over time as these models get, you know, more updated, more trained, hopefully, with best practices for training those that we're all going to benefit from. But again, to me, the flexibility to be able to pivot to the direction that you choose, I think, is is definitely paramount here. And, yeah, for you Versus Code fans, guess what? The Versus Code, extension for R does indeed have support for RStudio add ins. So you should be able to use Chatter kind of out of the box even in your Versus Code setup. I haven't tried it yet, but I've been able to use add ins in the past with things like Logdown and other packages that had these nice little add in integration. So, yeah, who knows? Give that a try, listener out there, if you're a Versus Code power user because, I would imagine Chatter is gonna fit right in there.

[00:11:54] Mike Thomas:

Yeah. It's a fantastic project and appreciate, you know, all the work that a lot of folks, it seems especially at Guru Ruiz, have put into this.

[00:12:01] Eric Nantz:

Yeah. You can definitely see it. And in fact, he actually gave a a talk at the 2023 r pharma conference about some of his developments. So I'll have a link to that in the show notes. Now we told you how, you know, using things like large diameter miles can make things a bit faster for your development. Well, another thing that you want faster, especially when things go haywire, is if you're in the package development mindset and you're starting to do the right thing. If you've been listening to us and others in the community, you write your function. You know that function is important.

Make a test for that, folks. It'll save you so much time and effort in the long run. Well, you may be in situations where you're writing a function that definitely has a lot going on, so to speak. Maybe it takes a lot of time to execute. Maybe it's impact it's interacting with some, you know, API that needs a little time to digest things. And you wanna still keep in the workflow of being able to test this efficiently, especially when things go haywire. And our next highlight here comes from Mike Mahoney, a PhD candidate who has also had definitely more than a few contributions to our weekly in the past where he talks about some of his recent explorations in helping to decipher the ways to fail fast in your test with respect to custom warnings and errors.

And what we're talking about here is that typically speaking, when you have a function that's depending on some kind of user input, you probably wanna have some guardrails around it to make sure the inputs are meeting the specific needs of that back end processing. And if you detect that something's out of line, you will often wanna send a message to the user or in some cases, even a warning or an error right off the bat to the user that something is not right. They're gonna have to go and try it again. Well, maybe it is more of a warning concept or maybe they'll still get a result, but it may not be quite what they expect. And what Mike does in his example here, he has a little mock function that is intentionally meant to take a while, but he's making clever use of the rlang package to not only display the message to the user, but also attach a custom class to that message.

I glossed over this years ago when I got familiar with Rlang and CLI, but this is a handy little feature because if you attach a class to this type of message or warning or error in your test to determine if that said warning or error actually fires, instead of having to test for the verbatim text of that message that it was showed to the user, you can test for that class of a message, which might be handy if you have more than one of these in a given function. Now that on its own, he's got an example where he builds a test to do that in his test suite. But there's one bit issue that, again, if you have an expensive function you're going to run it into, is that the function is still going to try to go through the rest of the processing even if your test for that warning is successful.

Well, how do you get it so that you wanna, say, escape the hatch, so to speak, when that warning occurs? That's where the the rest of the example that Mike puts together here. He develops a nice little trick that's making use of the try catch function, which comes in base r, and it's a way for you to evaluate a function. And if you detect that there's an error, you can tell what to do in that post processing. So what Mike does in this example is he takes the detection of that warning condition in his function, which, in this case, is making a huge number with, going above the machine integer max.

And then in his try catch block, he's got, okay, he expect an error that that error being actually a warning message instead of a typical error. So he does a little clever thing here, and this is not modifying the function itself. It's modifying the test that declaration of that function. So the function itself still has that nice, succinct syntax of if the integer is too big, send the warning, carry on. But it's in the test that block that he switches it a bit and says, I'm gonna expect that error for that warning condition. And that way, that the warning does indeed output as I expect. It's just gonna get out, and then I get on with my day, so to speak.

Now this may not be a one size fits all for everybody because maybe there are cases where you're still gonna wanna test if that function does perform successfully. But I could definitely see as you're in, like, the iterative development workflow that you'll want that flexibility to say, okay. I just wanna test for my air conditioning first and then not get bogged down with the execution time in that iterative process. And then when I feel like I'm confident enough, then start building in the rest of the testing suite. Again, testing and development practices can be a highly personal thing, but I just love the use of these built in features of r to kinda make your day to day a bit easier in your testing suite. So a short example here, easy to digest, but I think it's got immense value, especially if you're in your early days of building that really

[00:17:44] Mike Thomas:

extremely clever by Mike and I appreciate him putting this example code together to demonstrate this this use case. It sort of reminds me, about a concept that maybe my Elle, Salmon was the the author behind, but just the concept of thinking about an early return as opposed to return right at the end of your function, you know, if some condition is met then, return early instead of executing all the rest of the code. So, great example here by Mike. I really encourage folks to to check out the example code. It's sort of hard to do it justice in a in a podcast. But this is really easy, base our code for the most part, a little bit of our lang and test that that you could run natively if you wanted to and and see, the time difference here that Mike expresses.

[00:18:29] Eric Nantz:

Yeah. I'm again, great nugget of wisdom here that I'm gonna be taking into my packages that I have to deal with either launching, say, a complicated model or interacting with an API that I have no control over, who maybe those engineers didn't exactly make it efficient on the back end. So if I wanna fail, I wanna fail fast, and hence, it's gonna be a nice little snippet that I can take into my dev toolbox to make it fail fast and still keep that function pretty pure in the process. And speaking of making things easy for you, again, going back into the package development mindset, you've done that awesome work. You've got that package ready to go.

And then you're at that point of, am I really ready for CRAN yet? It can be a daunting task, but there are there's been an existing resource for us in the R community for over 8 years that has been immensely helpful as another sanity check to our package, especially with respect to different environments and different architectures. Well, what we're the project we're talking about has been the Rhub project, which has been led by Gabor Saasardi, a software engineer at Pozit. I believe Ma'al Salmons also contributed heavily to this project as well. Well, they have a very big milestone right now in our last highlight here because they are officially announcing in their blog post is that they are moving to R hub version 2. And, like I said, the impact that Rhub has had on the community itself cannot be understated with respect to package development and helping authors really get to the nitty gritty of how their package is gonna work across different architecture.

And this has a rich history. As I said, this was actually a project funded by the R Consortium, which I've spoken highly about all the way back in 2015. So it has been standing up for years. Now why is this version 2 so exciting? Well, they are making major pivots to their infrastructure where a lot of this has been, in essence, self made or homegrown in terms of architectures for containers and servers. Now they are piggybacking off of GitHub actions to help automate many of these same checks and more that they've been doing in our hub over the years. And if that doesn't sound familiar, there has been another project we've been speaking highly about called r Universe that is also heavily invested in the GitHub Action route. So now that's 2 major, major influences in the development, community for r that are making a big headway here.

Now you as the end user, I. E. The package author wants to leverage this version of rhub. What do you have to do? The good news is there's always been a package called rhub literally in our ecosystem itself. You just gotta update that package to the latest version that they're announcing here. And then I believe you might have to reregister for interaction with the service. But if you've done it before, it should be a fairly seamless operation. And then the mechanism to you as the end user is exactly the same. You're gonna be able to supply or request that your package be checked in a more custom way. More on that in a minute. But we have a special configuration file that goes to your GitHub repository.

Assuming your package source code is on GitHub, it'll be like a YAML file that in your main branch of the repo and optionally any custom branch that on every push to that said branch, it'll trigger in GitHub actions these other rhub version 2 checks. So then you can check the progress on that in the GitHub actions dialogue much like you would in any other GitHub action. I've been in I've been living in GitHub actions over the years, so it's becoming much more comfortable to me, albeit still not 100% yet. But again, they're taking all the hard work for you. You just got to put this configuration in your repo and the R Hub package itself will let you do that very quickly. And like I said, there are opportunities if you don't want to host your package itself on GitHub and still want to take advantage of something like GitHub Actions, there is a mechanism, a path forward for you as well where you can leverage the custom runners that the R Hub team has put together that are hosted under the R Consortium itself as these on demand runners, which would be similar to what you saw in the previous version of RHub.

But this is useful if you want to keep your package outside of GitHub and still want to leverage these kind of checks. There are a couple of caveats, though. Once you opt into this kind of check, obviously, the checks that are being run-in this Rhub, GitHub, Org under the R Consortium are still going to be public. So if you're okay with that, great. But, that's just something to keep in mind. With that said, I do think that this is gonna help the team immensely with their back end infrastructure. And, hopefully, you as the end user will be able to seamlessly opt into this, especially if you've already been invested in rhub. It should be a pretty seamless operation. And if you're new to rhub, it sounds like this is gonna be even faster for you and even more reliable and, hopefully, you know, get you on your way with more efficient package development.

So big congratulations

[00:24:09] Mike Thomas:

to Gabor and Myo and others on the rHub team for this, terrific milestone of version 2. Yeah, Eric. This is super exciting. You know, we've talked about this before, but the the need to be able to check your package and ensure that it it works and doesn't throw no any warnings or or notes or anything like that, across multiple different operating systems and setups ups that are that differ from your own, right? Which is really sort of before this, one of the only places that you could check, your package is is fantastic and it's it's super powerful. It looks like the rhub check function is a really nice interactive function, tries to identify the git and Github repository, that belongs to the package that you are trying to check. And then it has this whole list of it looks like about 20 different platforms that you can check your package on. And you can check it against as many, I think, of those platforms as you want. Just entering into the console, sort of the the index number for each platform, comma separated, that you want. One of the interesting things, Eric, I don't know if you know the answer to this, but if I'm looking at, you know, checks 1 through 4 here on Linux, Mac OS, Windows, It's it's it says that it's going to check that package against our and then it has a little asterisk, any version. So does that mean that, deeper within your your, maybe, YAML configuration file for your GitHub actions check, you're able to specify the exact version of r that you want, or is this sort of choosing the version of r for you?

[00:25:45] Eric Nantz:

I'd imagine it's probably choosing for you, but I wouldn't be surprised if deep in the weeds you are able to customize that in some way. I just haven't tried it myself yet. Got it. No. Because that would be interesting because I have a sort of a use recurring use case where I feel like we need to check

[00:25:58] Mike Thomas:

against maybe 3 versions, you know, the the latest major release of our probably the next beta release as well that's coming and then and then the previous, major release as well because we know some folks in organizations sort of lag behind. So we wanna make sure that everything's working across, past, present, and future, if you will. So I wonder if I'll have to dig a little bit deeper into it. Maybe next week, I can could share my results on, the ability to specify particular r versions here. Anybody on Mastodon that wants to chime in, feel free as well. But, yeah, this is fantastic and, you know, there are as you mentioned, some of those limitations of your package being public. You know, if you do want to keep your package private and you have it in a private GitHub repository, you just wanna use the rhubsetup and rhubcheck functions instead of the rc submit function, and that should be able to keep all of your your code private for you and take care of that. But this is really exciting stuff, from the R Consortium and or from the R Hub team, and really appreciate all the work that they have done, to allow us to be able to more robustly, develop software, check that our software is working, and build working tools for others to use.

[00:27:16] Eric Nantz:

Yeah. I'm working with a teammate at the day job that we're about to open source a a long time package in our in our, internal pipeline and hopefully get it to CRAN. And they were asking me, yeah. What's this rhub check stuff? I was like, oh, you're asking me at the right time. They just released version 2. So we're gonna have a play with that ourselves probably in the next week or 2 and see how that turns out for us. But, yeah, we're gonna be putting that on CRAN. So between that and our universe, I think we're gonna be in good hands, so to speak, to prepare for that big milestone for us. That's exciting. And I do wanna point out that there is also it looks like a a very new, but a nice place to potentially ask questions as you kick the tire on this is a discussions board in the GitHub repository

[00:27:58] Mike Thomas:

for our hub. So definitely check that out.

[00:28:01] Eric Nantz:

Yeah. This is, again, very welcome to get that that real time kind of feedback, you know, submitted. So definitely have that. Check out the, of course, the post itself, and you'll get a direct link to it. Having this dialogue early is going to be immensely helpful to Gabor and the team to make sure everything's working out correctly and, of course, the help with the future enhancements to our hub itself. And you know what else can help you all out there is, of course, the rest of the rweekly issue. We got a whole smorgasbord of package highlights, new updates, and existing packages, getting major updates, tutorials, great uses of data science across the board. So it'll take a couple of minutes for our additional finds here.

And as I've been leveraging different, you know, data back ends for my data explorations, especially with huge datasets, I've been looking into things like, you know, of course, historically SQLite, DuckDB now, you know, Parquet. It's all coming together, as they say. Art Steinmetz has a great post in this issue of R Weekly about the truth about tidy wrappers. It's a provocative title, but if you ever want a technical deep dive into the benefits and trade-offs of performance and other considerations with some of these wrappers you've heard about that, say, let you use dplyr with relational databases, Parquet files, or DuckDB.

This post is for you. It is a very comprehensive treatment with lots of example metrics, so you can make an informed decision as you look at the datasets you're analyzing in your particular project and see if it's the right fit to stick with the wrappers or to go full native with the respective database engine. So a really thought-provoking read, and a great post by Art on that one. And, Mike, what did you find? No. It's a great find, Eric. I found

[00:29:56] Mike Thomas:

a blog post by Hadley Wickham, announcing Tidyverse Developer Day 2024.

[00:30:01] Eric Nantz:

Woo hoo. Yeah. This is exciting. It seems like it's been a little while since they had a Tidyverse Developer Day.

[00:30:12] Mike Thomas:

On August 15th. And if you're curious about what Tidyverse Developer Day is, it's just a really open, communal day of folks sitting and learning together and coding to try to promote contribution to the Tidyverse codebase. They're going to provide everyone with food, and all you need to do is bring your laptop and your yearning to learn, so to speak. So it looks like anyone can attend, regardless of whether you've never created a pull request before or, as Hadley says, you've already made your 10th package. So they're welcoming beginners to intermediate to advanced folks. Anybody that's interested in the concept of maybe just contributing to open source, or contributing to the Tidyverse specifically.

I'm sure that they will have many issues already labeled and ready to go, from low-level beginner stuff to probably more advanced stuff if you really wanna get into the weeds of the Tidyverse. It's gonna cost $10, and they say that's really just because they don't want a lot of people taking a ticket and not necessarily showing up. They're trying to encourage, you know, some commitment from the folks that actually say that they're going to register for this. And I think they're looking forward to this day. And I'm going to try to make it if possible. I'll have to see if the logistics all line up. But this is super exciting. Just another thing that I love about open source: the fact that, you know, they're going to have a day dedicated to this, a very open sort of forum for folks to help contribute to code that's going to get used by, you know, thousands, if not tens or hundreds of thousands or millions, of people around the world. Pretty exciting.

[00:31:56] Eric Nantz:

Yeah. I've never actually been to a developer day myself, but I've interacted with many people that have in the earlier years of the RStudio conference, and everybody immensely enjoyed the experience. And it helps them get over that hump of package development, you know, contributing to open source. It's a friendly, welcoming environment. Yeah. Like I said, I've heard great stories from many in the R community, of all types of experience levels, getting such tangible benefits and, of course, helping open source along the way. So I highly recommend, if you have the capacity, joining that effort as well.

And, yeah, as we're recording, Mike, I'll do one little mini plug here. We are only a couple of days away from the 2024 Shiny Conference hosted by Appsilon, starting this Wednesday. Hopefully, by the time you're hearing this, there'll still be a day or so left and you can still register. We'll have a link to the conference site itself if you haven't registered yet. I am thrilled to be chairing the life sciences track, and I'll be leading a panel discussion about some of the major innovations in life sciences these days with Shiny. I'll be joined by Donnie Unardi, Harvey Lieberman, and Becca Krause. They are an all-star team, if I dare say so myself, of practitioners in life sciences that are pushing Shiny to another level, and I'll be thrilled to, you know, dive into some of these topics with them. You know, super exciting that ShinyConf is this week, we are looking forward to it. It's finally arrived. We have a little app showcase,

[00:33:27] Mike Thomas:

that's taking place on Thursday that we're excited for, but we're mostly excited for all the other fantastic content that will be presented during the conference. So we really appreciate Appsilon putting that on, I think with some help from Posit and others as well. Of course, we hope that you enjoyed this episode, and also R Weekly

[00:33:46] Eric Nantz:

is meant for you in the community and is powered by you in the community. The best way to help the project is to send your favorite resource or that new resource you found. It's just a pull request away. It's all available at rweekly.org. Click that little ribbon in the upper right. You'll get a link to the upcoming issue draft. All Markdown all the time. You know Markdown. I'm sure you do. If you haven't, it'll take you maybe 5 minutes to learn it. And if you can't learn it in 5 minutes, my good friend, Yihui, will give you $5. Just kidding.

He did tell me that one. So, luckily, I didn't need 5 minutes to learn it, though. Oh, I love it. I love it. And, of course, we look forward to you continuing to listen to this very show. We love hearing from you as well. We are now about two and a half weeks, I believe, into our new hosting provider, and everything seems to be working smoothly again. But I'm really excited for the directions I can take this platform in the future. In fact, I'm hoping I can share some really fancy stats with all of you based on some of the new back end stuff I've been able to integrate with this new provider. But, nonetheless, we love hearing from you. You can get in touch with us via the contact page, with a direct link in this episode's show notes. You can also send us a fun little boost along the way with one of these modern podcast apps you may have been hearing about.

We have a link to all those in the show notes as well. And, of course, we love hearing from you directly on our various accounts online. I'm mostly on Mastodon these days with @[email protected]. I'm on LinkedIn as well. Just search my name. You'll find me there. And, occasionally, on the Weapon X Twitter thingamajig at @theRcast. And, Mike, where can our listeners find you?

[00:35:29] Mike Thomas:

Yeah. You can find me on Mastodon as well at [email protected], or you can check out what I'm up to on LinkedIn if you search for Ketchbrook Analytics, K-E-T-C-H-B-R-O-O-K.

[00:35:43] Eric Nantz:

Awesome stuff. And, again, Mike's always got some cool stuff cooking in the oven, so to speak. So it's always great to see what you're up to. And with that, we're gonna take this episode out of the oven. We are done for today. We're gonna wrap up this episode of R Weekly Highlights, and we'll be back with another edition next week.

Additional Finds

Episode Wrapup